Implement platform protection

- By Yuri Diogenes and Orin Thomas

- 11/5/2022

Azure has native platform security capabilities and services that should be leveraged to provide the necessary level of security for your IaaS and PaaS workloads. In this sample chapter from Exam Ref AZ-500 Microsoft Azure Security Technologies, 2nd Edition, you will learn about the skills needed to implement advanced network security.

One of the main aspects of cloud computing is the shared responsibility model, where the cloud solution provider (CSP) and the customer share different levels of responsibilities, depending on the cloud service category. When it comes to platform security in an Infrastructure as a Service (IaaS) scenario, customers have a long list of responsibilities. In a Platform as a Service (PaaS) scenario, there are still some platform security responsibilities, but they are not as extensive as with IaaS workloads.

Azure has native platform security capabilities and services that should be leveraged to provide the necessary level of security for your IaaS and PaaS workloads while maintaining a secure management layer.

Skills in this chapter:

Skill 2.1: Implement advanced network security

Skill 2.2: Configure advanced security for compute

Skill 2.1: Implement advanced network security

To implement an Azure network infrastructure, you need to understand the different connectivity options available in Azure. These options will enable you to implement a variety of scenarios with different requirements. This section of the chapter covers the skills necessary to implement advanced network security.

Overview of Azure network components

Azure networking provides built-in capabilities to enable connectivity between Azure resources, connectivity from on-premises networks to Azure resources, and branch office to branch office connectivity in Azure.

While those skills are not directly called out in the AZ-500 exam outline, it is important for you to understand these concepts. If you’re already comfortable with your skill level, you can skip to “Secure the connectivity of virtual networks,” later in this chapter.

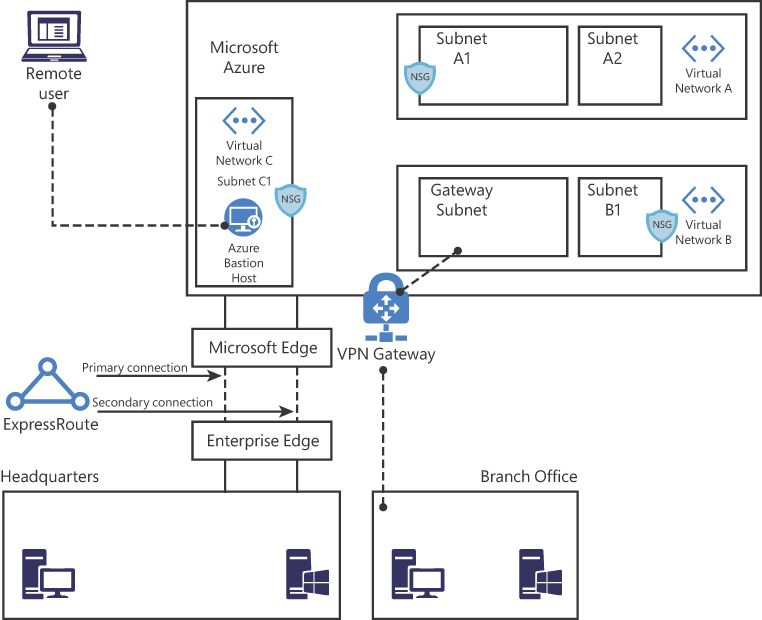

To better understand the different components of an Azure network, let’s review Contoso’s architecture diagram shown in Figure 2-1.

FIGURE 2-1 Contoso network diagram

In Figure 2-1, you can see Azure infrastructure (on top), with three virtual networks. Contoso needs to segment its Azure network in different virtual networks (VNets) to provide better isolation and security. Having VNets in its Azure infrastructure allows Contoso to connect Azure Virtual Machines (VMs) to securely communicate with each other, the Internet, and Contoso’s on-premises networks.

A VNet is much like a traditional physical, on-premises network where you operate in your own data center. However, a VNet offers some additional benefits, including scalability, availability, and isolation. When you create a VNet, you must specify a custom private IP address space that will be used by the resources that belong to this VNet. For example, if you deploy a VM in a VNet with an address space of 10.0.0.0/24, the VM will be assigned a private IP, such as 10.0.0.10/24.

Notice in Figure 2-1 that there are subnets in each VNet in Contoso’s network. Contoso needs to segment the virtual network into one or more subnetworks and allocate a portion of the virtual network’s address space to each subnet. With this setup, Contoso can deploy Azure resources in a specific subnet, just like it used to do in its on-premises network. From an organizational and structure perspective, subnets have allowed Contoso to segment its VNet address space into smaller segments that are appropriate for its internal network. By using subnets, Contoso also was able to improve address allocation efficiency.

Another important trio of components is shown in Figure 2-1: subnets A1, B1, and C1. Each of these subnets has a network security group (NSG) bound to it, which provides an extra layer of security based on rules that allow or deny inbound or outbound network traffic.

NSG security rules are evaluated by their priority, and each is identified with a number between 100 and 4096, where the lowest numbers are processed first. The security rules use 5-tuple information (source address, source port, destination address, destination port, and protocol) to allow or deny the traffic. When the traffic is evaluated, a flow record is created for existing connections, and the communication is allowed or denied based on the connection state of the flow record. You can compare this type of configuration to the old VLAN segmentation that was often implemented with on-premises networks.
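The priority-ordered, 5-tuple matching described above can be illustrated with a minimal Python sketch. This is not how Azure implements NSGs; the `Rule` class, the `evaluate` function, the sample rules, and the fall-through behavior when nothing matches are all assumptions made for illustration, and connection-state tracking is left out.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified model of an NSG security rule."""
    name: str
    priority: int          # 100-4096; lower numbers are evaluated first
    source: str            # CIDR prefix, for example "0.0.0.0/0"
    source_port: str       # "*" or a single port number as text
    destination: str       # CIDR prefix
    destination_port: str  # "*" or a single port number as text
    protocol: str          # "Tcp", "Udp", or "*"
    allow: bool


def _port_matches(pattern: str, port: int) -> bool:
    return pattern == "*" or int(pattern) == port


def evaluate(rules, src, src_port, dst, dst_port, protocol):
    """Return (rule name, allowed) for the first rule that matches the 5-tuple."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if (
            ip_address(src) in ip_network(rule.source)
            and _port_matches(rule.source_port, src_port)
            and ip_address(dst) in ip_network(rule.destination)
            and _port_matches(rule.destination_port, dst_port)
            and rule.protocol in ("*", protocol)
        ):
            return rule.name, rule.allow
    # Assumption for this sketch: traffic that matches no rule is denied.
    return None, False


# Sample rules (made up for this example), deliberately listed out of order.
RULES = [
    Rule("deny-all-inbound", 4096, "0.0.0.0/0", "*", "10.0.1.0/24", "*", "*", False),
    Rule("allow-https", 100, "0.0.0.0/0", "*", "10.0.1.0/24", "443", "Tcp", True),
]

print(evaluate(RULES, "203.0.113.7", 50000, "10.0.1.4", 443, "Tcp"))
print(evaluate(RULES, "203.0.113.7", 50000, "10.0.1.4", 3389, "Tcp"))
```

Because the rules are sorted by priority before matching, the order in which they are defined does not matter; only the priority number does.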

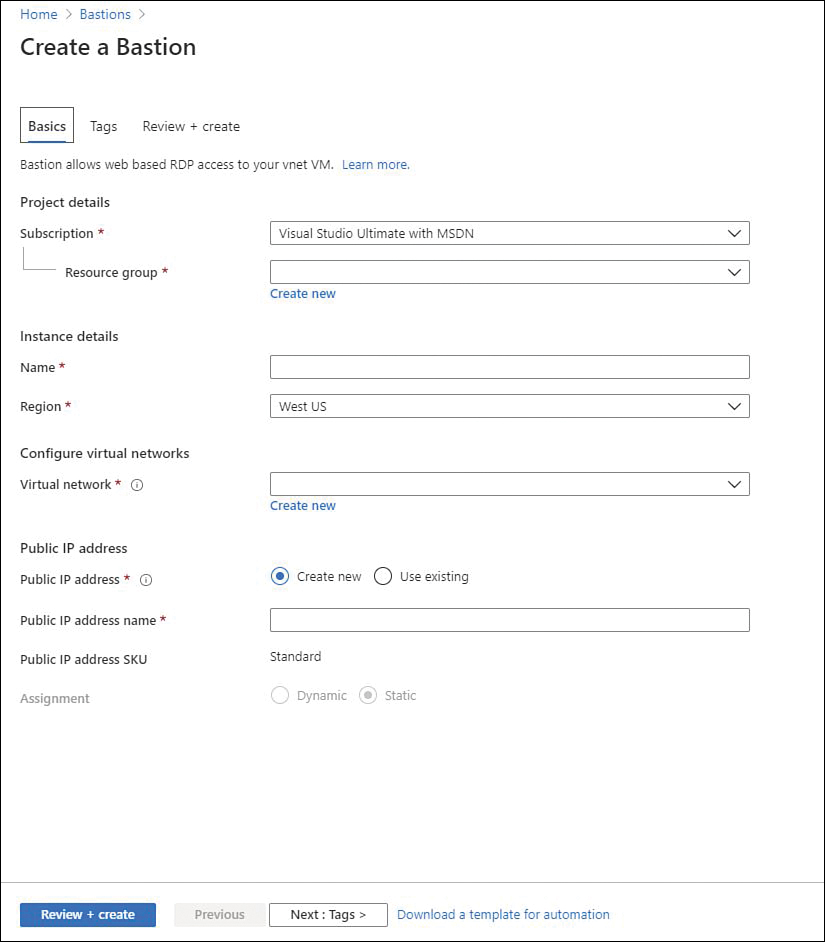

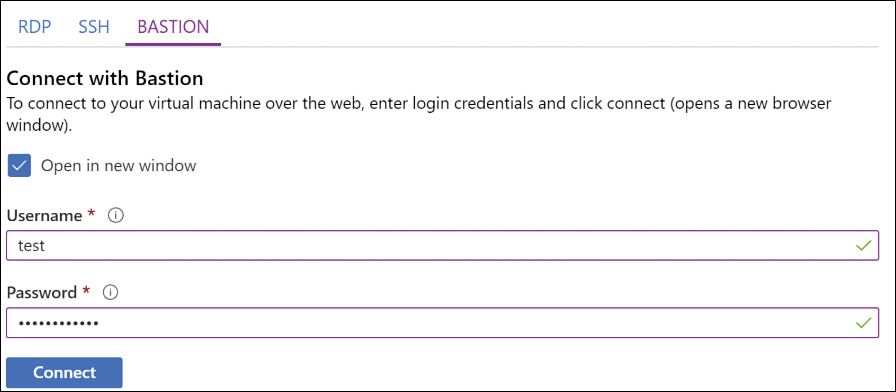

Contoso is headquartered in Dallas, and it has a branch office in Sydney. Contoso needs to provide secure and seamless RDP/SSH connectivity to its virtual machines directly from the Azure portal over TLS. Contoso doesn’t want to use jumpbox VMs and instead wants to allow remote access to back-end subnets through the browser. For this reason, Contoso implemented Azure Bastion, as you can see in the VNet C, subnet C1 in Figure 2-1.

Azure Bastion is a platform-managed PaaS service that can be provisioned in a VNet.

For Contoso’s connectivity with Sydney’s branch office, it is using a VPN gateway in Azure. A virtual network gateway in Azure is composed of two or more VMs that are deployed to a specific subnet called a gateway subnet. The VMs that are part of the virtual network gateway contain routing tables and run specific gateway services. These VMs are automatically created when you create the virtual network gateway, and you don’t have direct access to those VMs to make custom configurations to the operating system.

When planning your VNets, consider that each VNet may only have one virtual network gateway of each type, and the gateway type may only be VPN or ExpressRoute. Use VPN when you need to send encrypted traffic across the public Internet to your on-premises resources.

For example, let’s say that Contoso needs a faster, more reliable, and more secure connection with consistent latency between its Azure network and its headquarters in Dallas. Contoso decides to use ExpressRoute, as shown in Figure 2-1. ExpressRoute allows Contoso to extend its on-premises networks into the Microsoft cloud (Azure or Office 365) over a private connection because ExpressRoute does not go over the public Internet.

In Figure 2-1, notice that the ExpressRoute circuit consists of two connections to two Microsoft Enterprise Edge Routers (MSEEs) at an ExpressRoute Location from the connectivity provider or your network edge. While you might choose not to deploy redundant devices or Ethernet circuits at your end, the connectivity providers use redundant devices to ensure that your connections are handed off to Microsoft in a redundant manner. This Layer 3 connectivity redundancy is a requirement for the Microsoft SLA to be valid.

Network segmentation is important in many scenarios, and you need to understand the design requirements to suggest the implementation options. Let’s say you want to ensure that Internet hosts cannot communicate with hosts on a back-end subnet but can communicate with hosts on the front-end subnet. In this case, you should create two VNets: one for your front-end resources and another for your back-end resources.

When configuring your virtual network, also take into consideration that the resources you deploy within the virtual network will inherit the capability to communicate with each other. You can also enable virtual networks to connect to each other, or you can enable resources in either virtual network to communicate with each other by using virtual network peering. When connecting virtual networks, you can choose to access other VNets that are in the same or different Azure regions. Follow the steps below to configure your virtual network using the Azure portal:

Navigate to the Azure portal at https://portal.azure.com.

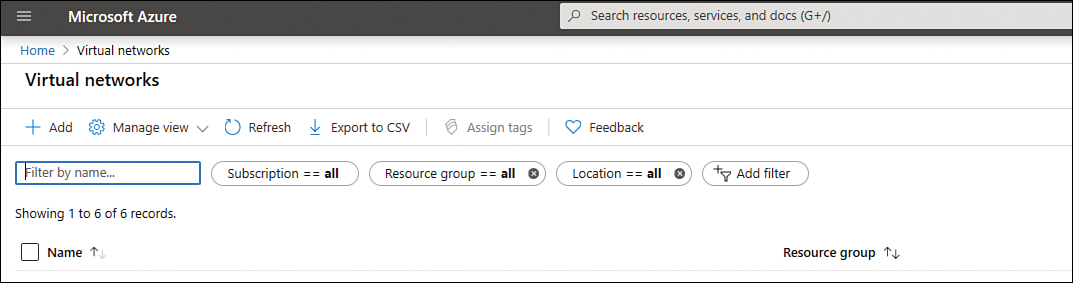

In the search bar, type virtual networks, and under Services, click Virtual Networks. The Virtual Networks page appears, as shown in Figure 2-2.

FIGURE 2-2 Azure Virtual Networks page

Click the Add button, and the Create Virtual Network page appears, as shown in Figure 2-3.

On the Basics tab, select the Subscription for the VNet and the Resource Group.

FIGURE 2-3 The Create Virtual Network page allows you to customize your VNet deployment

In the Name field, type a comprehensive name for the VNet, and in the Region field, select the Azure region in which the VNet is going to reside. Finally, click the IP Addresses tab.

On the IP Addresses page, in the IPv4 field, type the address space in classless inter-domain routing (CIDR) format; for example, you could enter 10.3.0.0/16.

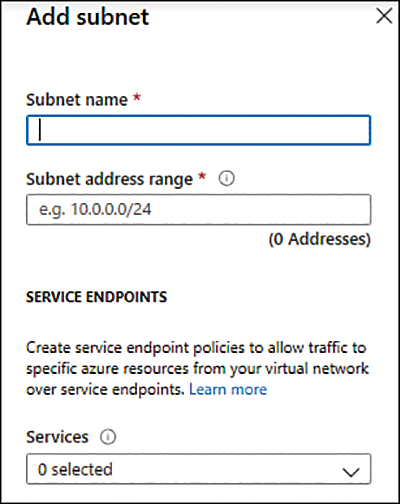

Click the Add Subnet button. The Add Subnet blade appears, as shown in Figure 2-4.

FIGURE 2-4 Add Subnet blade

In the Subnet Name field, type a name for this subnet.

In the Subnet Address Range, type the IP range for this subnet in CIDR format, such as 10.3.0.0/16. Keep in mind that the smallest supported IPv4 subnet is /29, and the largest is /8.

Click the Add button; the subnet that you just created appears under the Subnet Name section.

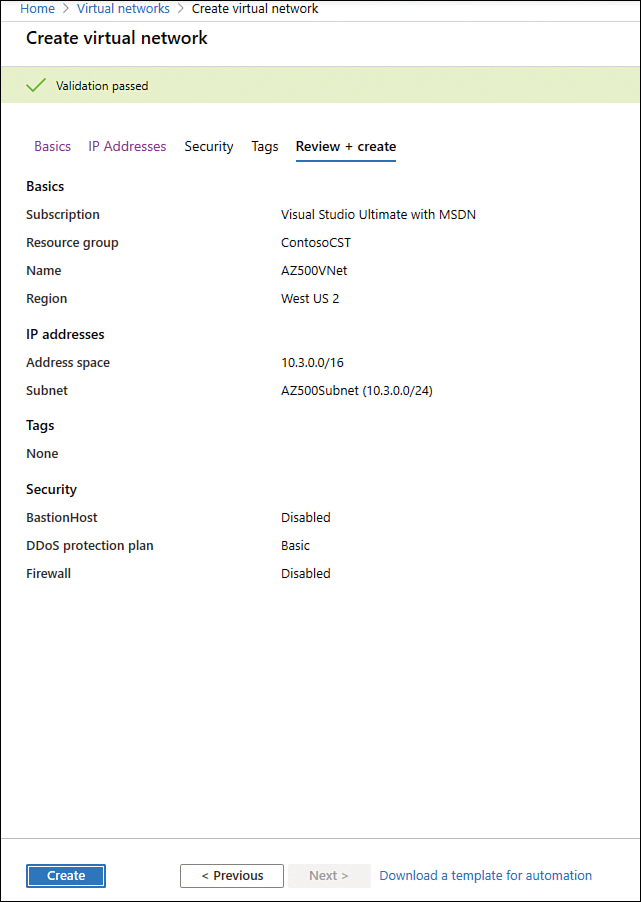

Leave the default selections for now and click the Review + Create button. The validation result appears, which is similar to the one shown in Figure 2-5.

FIGURE 2-5 Summary of the selections with the validation results

Click the Create button.

The Overview page appears with the final deployment status. On this page, click the Go To Resource button and review these options on the left navigation pane: Overview, Address Space, and Subnets.

Notice that the parameters you configured during the creation of your VNet will be distributed among the different options on the VNet page. As you saw in the previous steps, creating a VNet using the Azure portal is a straightforward process, though in some circumstances, you might need to automate the creation process, and you can use PowerShell to do just that.

When you are creating your virtual network, you can use any IP range that is part of RFC 1918 (10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16). However, you cannot add the following address ranges:

224.0.0.0/4 (multicast)

255.255.255.255/32 (broadcast)

127.0.0.0/8 (loopback)

169.254.0.0/16 (link-local)

168.63.129.16/32 (internal DNS)

Also, consider the following points:

Azure reserves x.x.x.0 as a network address and x.x.x.1 as a default gateway.

x.x.x.2 and x.x.x.3 are mapped to the Azure DNS IPs to the VNet space.

x.x.x.255 is reserved for a network broadcast address.
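To see what these reservations mean for a concrete subnet, the following Python sketch lists the five reserved addresses and counts what is left for your resources. The function names are hypothetical, and the sketch simply applies the rules stated above using the standard `ipaddress` module.

```python
from ipaddress import ip_network


def azure_reserved(prefix: str) -> dict:
    """Return the five addresses the text describes as reserved in a subnet."""
    net = ip_network(prefix)
    first = net.network_address
    return {
        "network": first,
        "default_gateway": first + 1,
        "azure_dns": (first + 2, first + 3),
        "broadcast": net.broadcast_address,
    }


def usable_addresses(prefix: str) -> int:
    """Addresses left for resources once the five reserved ones are removed."""
    return ip_network(prefix).num_addresses - 5


for name, value in azure_reserved("10.3.0.0/24").items():
    print(name, value)
print("usable:", usable_addresses("10.3.0.0/24"))
```

Applied to a /29 subnet, the same arithmetic leaves only three usable addresses, which is worth keeping in mind when you size small subnets.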

To automate that, you can either use PowerShell on your client workstation (using Connect-AzAccount to connect to your Azure subscription) or use Cloud Shell directly from https://shell.azure.com. To create a virtual network using PowerShell, you need to use the New-AzVirtualNetwork cmdlet, as shown here:

    $AZ500Subnet = New-AzVirtualNetworkSubnetConfig -Name AZ500Subnet -AddressPrefix "10.3.0.0/24"
    New-AzVirtualNetwork -Name AZ500VirtualNetwork -ResourceGroupName ContosoCST -Location centralus -AddressPrefix "10.3.0.0/16" -Subnet $AZ500Subnet

In this example, you have the $AZ500Subnet variable, which configures a new subnet for this VNet using the New-AzVirtualNetworkSubnetConfig cmdlet. Next, the New-AzVirtualNetwork cmdlet is used to create the new VNet, and it calls the $AZ500Subnet variable at the end of the command line to create the subnet.
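Before running a deployment like this, it can help to confirm that the subnet prefix actually falls inside the VNet address space and does not collide with an existing subnet. The following Python sketch shows one way to check that offline; the helper names are hypothetical, and it only uses the standard `ipaddress` module rather than any Azure API.

```python
from ipaddress import ip_network


def subnet_fits(vnet_prefix: str, subnet_prefix: str) -> bool:
    """True when the subnet prefix is contained in the VNet address space."""
    return ip_network(subnet_prefix).subnet_of(ip_network(vnet_prefix))


def overlaps_any(candidate: str, existing) -> bool:
    """True when the candidate prefix overlaps any already-defined subnet."""
    new = ip_network(candidate)
    return any(new.overlaps(ip_network(prefix)) for prefix in existing)


# Values taken from the PowerShell example above.
print(subnet_fits("10.3.0.0/16", "10.3.0.0/24"))
# A second subnet that would collide with AZ500Subnet.
print(overlaps_any("10.3.0.128/25", ["10.3.0.0/24"]))
```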

After creating your VNet, you can start connecting resources to it. In an IaaS scenario, it is very common to connect your virtual machines (VMs) to the VNet. Assuming you have Virtual Machine Contributor privileges in the subscription, you can quickly deploy a new VM using the New-AzVM PowerShell cmdlet, as shown here:

    New-AzVm `
      -ResourceGroupName "ContosoCST" `
      -Location "East US" `
      -VirtualNetworkName "AZ500VirtualNetwork" `
      -SubnetName "AZ500Subnet" `
      -Name "AZ500VM"

Routing

In a physical network environment, you usually need to start configuring routes as soon as you expand your network to have multiple subnets. In Azure, the routing table is automatically created for each subnet within an Azure VNet. The default routes created by Azure and assigned to each subnet in a virtual network can’t be removed. The default route that is created contains an address prefix and the next hop (where the packet should go). When traffic leaving the subnet is sent to an IP address within the address prefix of a route, that route is the one Azure uses.

When you create a VNet, Azure creates a route with an address prefix that corresponds to each address range that you defined within the address space of your VNet. If the VNet has multiple address ranges defined, Azure creates an individual route for each address range. You don’t need to worry about creating routes between subnets within the same VNet because Azure automatically routes traffic between subnets using the routes created for each address range. Also, differently from your physical network topology and routing mechanism, you don’t need to define gateways for Azure to route traffic between subnets. In an Azure routing table, this route appears as:

Source: Default

Address prefix: Unique to the virtual network

Next hop type: Virtual network

If the destination of the traffic is the Internet, Azure leverages the system-default route 0.0.0.0/0 address prefix, which routes traffic for any address not specified by an address range within a virtual network to the Internet. The only exception to this rule is if the destination address is for one of Azure’s services. In this case, instead of routing the traffic to the Internet, Azure routes the traffic directly to the service over Azure’s backbone network. The other scenarios in which Azure will add routes are as follows:

When you create a VNet peering: In this case, a route is added for each address range within the address space of each virtual network peering that you created.

When you add a Virtual Network Gateway: In this case, one or more routes with a virtual network gateway listed as the next hop type are added.

When a VirtualNetworkServiceEndpoint is added: When you enable a service endpoint for an Azure service, the public IP addresses of that service are added to the route table by Azure.

You might also see None in the routing table’s Next Hop Type column. Traffic routed to this hop is automatically dropped. Azure automatically creates default routes with this next hop type for 10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16 (RFC 1918), and 100.64.0.0/10 (RFC 6598).

At this point, you might ask: “If all these routes are created automatically, in which scenario should I create a custom route?” You should do this only when you need to alter the default routing behavior. For example, if you add an Azure Firewall or any other virtual appliance, you can change the default route (0.0.0.0/0) to point to this virtual appliance. This will enable the appliance to inspect the traffic and determine whether to forward or drop the traffic. Another example is when you want to ensure that traffic from hosts doesn’t go to the Internet; you can control the routing rules to accomplish that.
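The effect of overriding the default route can be sketched in Python. Azure selects the route with the longest matching prefix and, when two routes share the same prefix, prefers user-defined routes over BGP routes and BGP routes over system routes. The route list, the `select_route` function, and the source labels below are assumptions made for this illustration, not output from a real route table.

```python
from ipaddress import ip_address, ip_network

# Tie-break order when two routes share the same prefix (lower value wins).
SOURCE_RANK = {"User": 0, "BGP": 1, "Default": 2}

# Hypothetical effective routes: (source, address prefix, next hop type).
ROUTES = [
    ("Default", "10.3.0.0/16", "VirtualNetwork"),
    ("Default", "0.0.0.0/0", "Internet"),
    ("Default", "10.0.0.0/8", "None"),
    ("User", "0.0.0.0/0", "VirtualAppliance"),
]


def select_route(destination, routes=ROUTES):
    """Pick the longest-prefix match, breaking ties by route source."""
    dest = ip_address(destination)
    candidates = [r for r in routes if dest in ip_network(r[1])]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda r: (-ip_network(r[1]).prefixlen, SOURCE_RANK[r[0]]),
    )


for target in ("10.3.0.5", "10.9.9.9", "8.8.8.8"):
    print(target, "->", select_route(target))
```

In this sketch, traffic inside the VNet still uses the more specific virtual network route, while Internet-bound traffic follows the user-defined 0.0.0.0/0 route to the virtual appliance instead of the system default.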

To create a custom route that is effective for your needs, you need to create a custom routing table, create a custom route, and associate the routing table to a subnet, as shown in the PowerShell sequence that follows.

Create the routing table using the New-AzRouteTable cmdlet, as shown here:

    $routeTableAZ500 = New-AzRouteTable `
      -Name 'AZ500RouteTable' `
      -ResourceGroupName ContosoCST `
      -Location EastUS

Create the custom route using multiple cmdlets. First, you retrieve the route table information using Get-AzRouteTable, and then you create the route using Add-AzRouteConfig. Lastly, you use Set-AzRouteTable to write the routing configuration to the route table:

    Get-AzRouteTable `
      -ResourceGroupName "ContosoCST" `
      -Name "AZ500RouteTable" |
      Add-AzRouteConfig `
        -Name "ToAZ500Subnet" `
        -AddressPrefix 10.0.1.0/24 `
        -NextHopType "VirtualAppliance" `
        -NextHopIpAddress 10.0.2.4 |
      Set-AzRouteTable

Now that you have the routing table and the custom route, you can associate the route table with the subnet. Notice here that you first write the subnet configuration to the VNet using the Set-AzVirtualNetwork cmdlet. After that, you use Set-AzVirtualNetworkSubnetConfig to associate the route table to the subnet:

    # $virtualNetwork is assumed to already hold the VNet object that contains the subnet
    $virtualNetwork | Set-AzVirtualNetwork
    Set-AzVirtualNetworkSubnetConfig `
      -VirtualNetwork $virtualNetwork `
      -Name 'CustomAZ500Subnet' `
      -AddressPrefix 10.0.0.0/24 `
      -RouteTable $routeTableAZ500 |
      Set-AzVirtualNetwork

Virtual network peering

When you have multiple VNets in your Azure infrastructure, you can connect those VNets using VNet peering. You can use VNet peering to connect VNets within the same Azure region or across Azure regions; doing so is called global VNet peering.

When the VNets are in the same region, the network latency between VMs that are communicating through the VNet peering is the same as the latency within a single virtual network. It’s also important to mention that the traffic between VMs in peered virtual networks is not through a gateway or over the public Internet; instead, that traffic is routed directly through the Microsoft backbone infrastructure. To create a VNet peering using the Azure portal, follow these steps:

Navigate to the Azure portal at https://portal.azure.com.

In the search bar, type virtual networks, and under Services, click Virtual Networks.

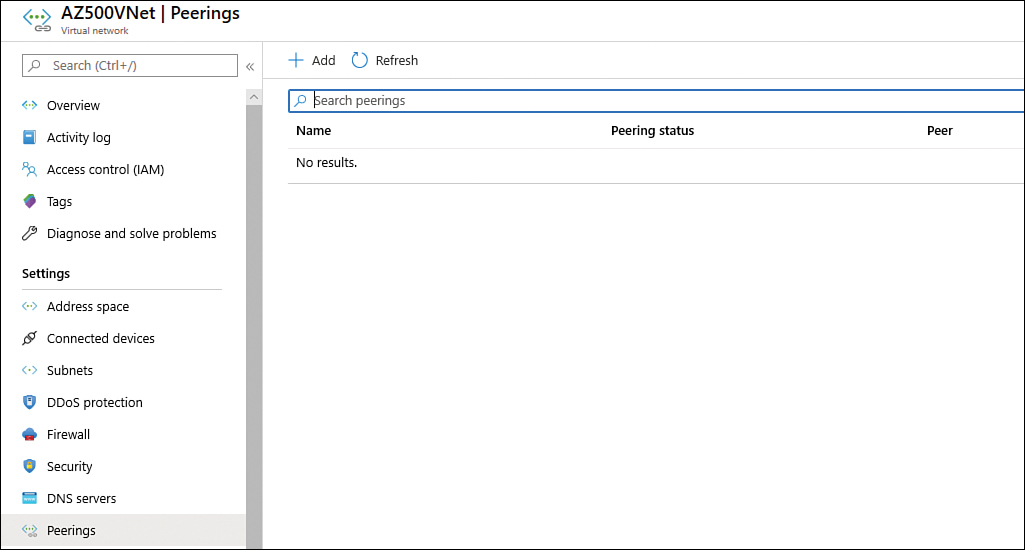

Click the VNet that you want to peer, and on the left navigation pane, click Peerings (see Figure 2-6).

FIGURE 2-6 Configuring VNet peering

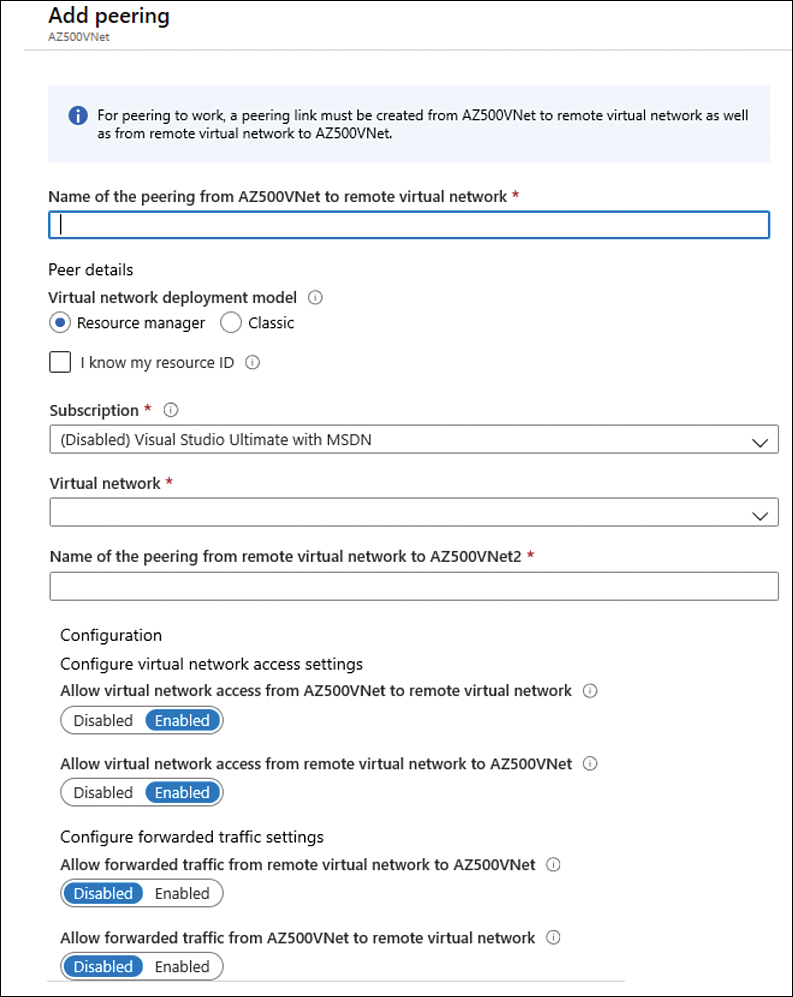

Click the Add button, and the Add Peering page appears, as shown in Figure 2-7.

In the Name field, type a name for this peering.

In the Subscription field, select the subscription that has the VNet to which you want to connect.

In the Virtual Network field, click the drop-down menu and select the VNet that you want to peer.

In the Name Of The Peering From Remote Virtual Network field, type the name that you want to appear for this peering connection on the other VNet.

The next two options, Allow Virtual Network Access From [VNet name] To Remote Virtual Network and Allow Virtual Network Access From Remote Virtual Network To [VNet name], are used to control the communication between those VNets. If you want full connectivity from both directions, make sure to leave the Enabled option selected (default selection) for both. Enabling communication between virtual networks allows resources connected to either virtual network to communicate with each other with the same bandwidth and latency as if they were connected to the same virtual network.

FIGURE 2-7 Adding a new peering

The next two options—Allow Forwarded Traffic From Remote Virtual Network To [VNet name] and Allow Forwarded Traffic From [VNet name] To Remote Virtual Network—are related to allowing forwarded traffic. You should select Enable for both settings only when you need to allow traffic that didn’t originate from the VNet to be forwarded by a virtual network appliance through a peering. For example, consider three virtual networks named VNetTX, VNetWA, and MainHub. A peering exists between each spoke VNet (VNetTX and VNetWA) and the Hub virtual network, but peerings don’t exist between the spoke VNets. A network virtual appliance is deployed in the Hub VNet, and user-defined routes can be applied to each spoke VNet to route the traffic between the subnets through the network virtual appliance. If this option is disabled, there will be no traffic flow between the two spokes through the hub.

Click OK to finish the configuration.

To configure a VNet peering using PowerShell, you just need to use the Add-AzVirtualNetworkPeering cmdlet, as shown here:

Add-AzVirtualNetworkPeering -Name 'NameOfTheVNetPeering' -VirtualNetwork SourceVNet -RemoteVirtualNetworkId RemoteVNet

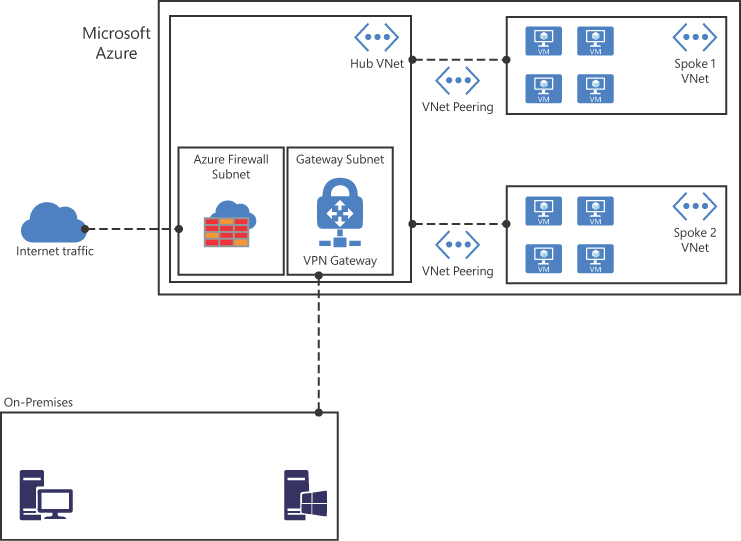

A peered VNet can have its own gateway, and the VNet can use its gateway to connect to an on-premises network. One common use of VNet peering is when you are building a hub-spoke network. In this type of topology, the hub is a VNet that acts as a central hub for connectivity to your on-premises network. The spokes are VNets that are peering with the hub, allowing them to be isolated, which increases their security boundaries. An example of this topology is shown in Figure 2-8.

FIGURE 2-8 Hub-spoke network topology using VNet peering

A hybrid network uses the hub-spoke architecture model to route traffic between Azure VNets and on-premises networks. When there is a site-to-site connection between the Azure VNet and the on-premises data center, you must define a gateway subnet in the Azure VNet. All the traffic from the on-premises data center would then flow via the gateway subnet.
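A point that is easy to miss in the hub-spoke model is that peering is not transitive: two spokes peered with the same hub cannot reach each other unless traffic is forwarded through the hub. The following Python sketch models that behavior with the VNet names from the earlier example. The `can_communicate` function and its `forwarding_via` parameter are hypothetical, and the sketch assumes that naming a hub means a network virtual appliance, user-defined routes, and the Allow Forwarded Traffic settings are all in place there.

```python
# Peerings from the example: each spoke is peered with the hub only.
PEERINGS = {
    frozenset({"MainHub", "VNetTX"}),
    frozenset({"MainHub", "VNetWA"}),
}


def can_communicate(src, dst, peerings=PEERINGS, forwarding_via=None):
    """Direct peering always works; spoke-to-spoke needs a forwarding hub."""
    if src == dst or frozenset({src, dst}) in peerings:
        return True
    if forwarding_via is None:
        return False
    # Both VNets must be peered with the hub that forwards the traffic.
    return (
        frozenset({src, forwarding_via}) in peerings
        and frozenset({forwarding_via, dst}) in peerings
    )


print(can_communicate("VNetTX", "MainHub"))
print(can_communicate("VNetTX", "VNetWA"))
print(can_communicate("VNetTX", "VNetWA", forwarding_via="MainHub"))
```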

Network address translation

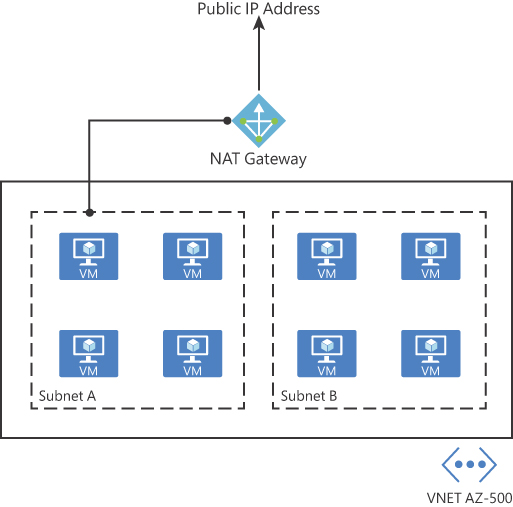

Azure has a Virtual Network NAT (network address translation) capability that enables outbound-only Internet connectivity for virtual networks. This is a common scenario when you want that outbound connectivity to use a specified static public IP address (static NAT), or you want to use a pool of public IP addresses (Dynamic NAT).

Keep in mind that outbound connectivity is possible without the use of an Azure load balancer or a public IP address directly attached to the VM. Figure 2-9 shows an example of the topology with a NAT Gateway.

You can implement NAT by using a public IP prefix directly, or you can distribute the public IP addresses of the prefix across multiple NAT gateway resources. NAT also changes the network route because it takes precedence over other outbound scenarios, and it will replace the default Internet destination of a subnet. From an availability standpoint (which is critical for security), NAT always has multiple fault domains, which means it can sustain multiple failures without service outage.

FIGURE 2-9 NAT Gateway topology

To create a NAT Gateway for your subnet, you first need to create a public IP address and a public IP prefix. Follow the steps below to perform these tasks:

Navigate to the Azure portal at https://portal.azure.com.

In the main dashboard, click the Create A Resource button.

On the New page, type Public IP and click the Public IP Address option that appears in the list.

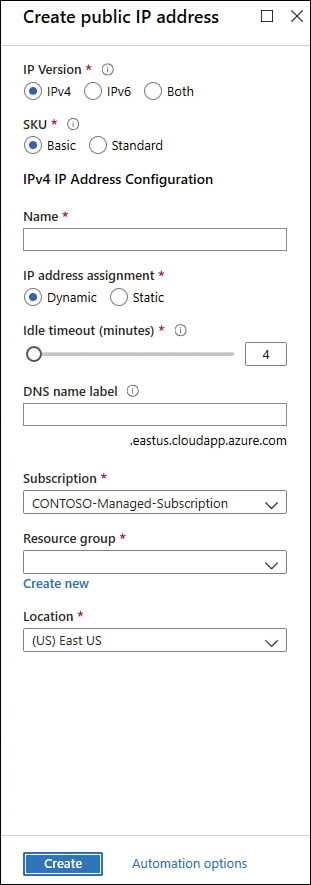

On the Public IP Address page, click the Create button; the Create Public IP Address page appears, as shown in Figure 2-10.

FIGURE 2-10 Creating a public IP address to be used by NAT Gateway

Type the name for this public IP address and select the subscription, resource group, and the Azure location. For this example, you can leave all other options with their default selections. Once you finish, click the Create button.

Now you should repeat steps 1 and 2. In the third step, type public IP prefix and click the Public IP Prefix option that appears in the drop-down menu.

On the Create A Public IP Prefix page, configure the following relevant options:

Select the appropriate Subscription.

Select the appropriate Resource Group.

Type the Prefix Name.

Select the appropriate Azure Region.

In the Prefix Size drop-down menu, select the appropriate size for your deployment.

Once you finish configuring these options, click the Review + Create button and click Create to finish.

Now that you have the two requirements fulfilled, you can create the NAT Gateway.

Navigate to the Azure portal at https://portal.azure.com.

In the main dashboard, click the Create A Resource button.

On the New page, type NAT Gateway and click the NAT Gateway option in the list.

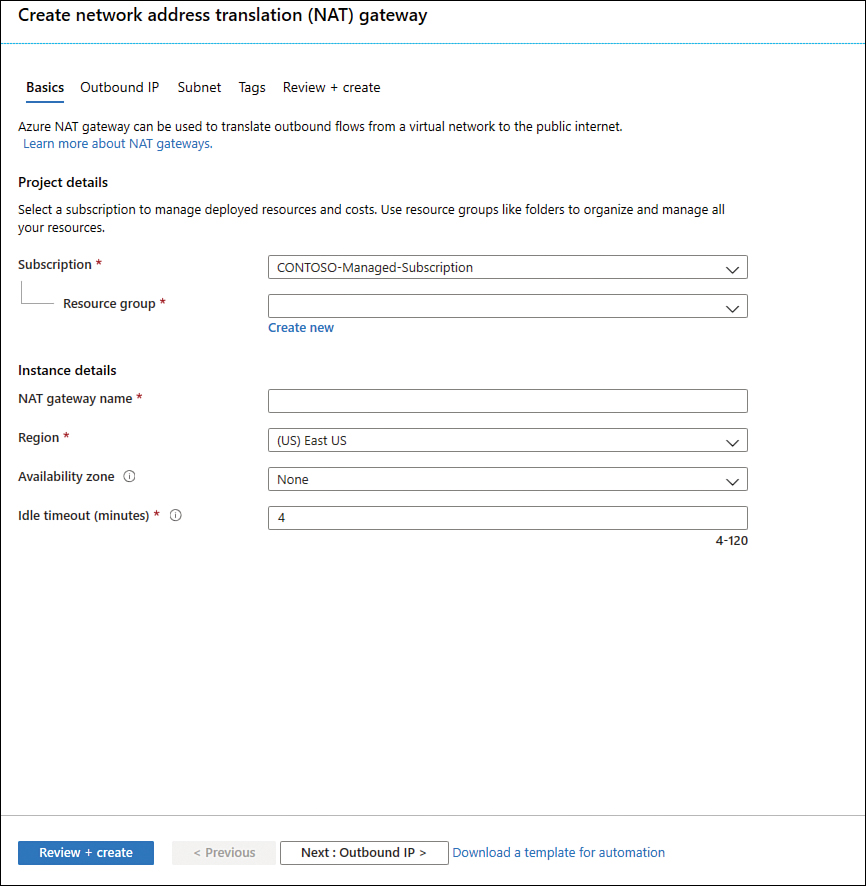

On the NAT Gateway page, click Create. The Create Network Address Translation (NAT) Gateway page appears, as shown in Figure 2-11.

On the Basics tab, make sure to configure the following options:

Select the appropriate Subscription and Resource Group.

Type the NAT Gateway Name.

Select the appropriate Azure Region and Availability Zone.

Move to the next tab, Outbound IP, and select the Public IP Address and Prefix Name that you created previously.

Next, on the Subnet tab, you will configure which subnets of a VNet should use this NAT gateway.

The Tags tab is optional, and you should use it only when you need to logically organize your resources in a particular taxonomy to easily identify them later.

You can review a summary of the selections in the Review + Create tab. Once you finish reviewing it, click the Create button.

You can also use the New-AzNatGateway cmdlet to create a NAT Gateway using PowerShell, as shown:

New-AzNatGateway -ResourceGroupName "AZ500RG" -Name "nat_gt" -IdleTimeoutInMinutes 4 -Sku "Standard" -Location "eastus2" -PublicIpAddress PublicIPAddressName

FIGURE 2-11 Creating a NAT Gateway in Azure

Secure the connectivity of hybrid networks

With organizations migrating to the cloud, virtual private networks (VPNs) are constantly used to establish a secure communication link between on-premises and cloud network infrastructure. Many organizations will also keep part of their resources on-premises while taking advantage of cloud computing to host different services, which creates a hybrid environment. While this is one common scenario, there are many other scenarios where a VPN can be used. You can use Azure VPN to connect two different Azure regions or subscriptions.

Azure natively offers a service called VPN gateway, which is a specific type of virtual network gateway that is used to send encrypted traffic between an Azure virtual network and on-premises resources. You can also use a VPN gateway to send encrypted traffic between Azure virtual networks. When planning your VPN Gateway implementation, be aware that each virtual network can have only one VPN gateway, and you can create multiple connections to the same VPN gateway. When deploying a hybrid network that needs to create a cross-premises connection, you can select from different types of VPN connectivity. The available options are:

Point-to-Site (P2S) VPN: This type of VPN is used in scenarios where you need to connect to your Azure VNet from a remote location. For example, you would use P2S when you are working remotely (hotel, home, conference, and the like), and you need to access resources in your VNet. This VPN uses SSTP (Secure Socket Tunneling Protocol) or IKEv2 and does not require a VPN device.

Site-to-Site (S2S) VPN: This type of VPN is used in scenarios where you need to connect on-premises resources to Azure. The encrypted connection tunnel uses IPsec/IKE (IKEv1 or IKEv2).

VNet-to-VNet: As the name states, this VPN is used in scenarios where you need to encrypt connectivity between VNets. This type of connection uses IPsec (IKEv1 and IKEv2).

Multi-Site VPN: This type of VPN is used in scenarios where you need to expand your site-to-site configuration to allow multiple on-premises sites to access a virtual network.

ExpressRoute is another option that allows connectivity from your on-premises resources to Azure. This option uses a private connection to Azure from your WAN, instead of a VPN connection over the Internet.

VPN authentication

The Azure VPN connection is authenticated when the tunnel is created. Azure generates a pre-shared key (PSK), which is used for authentication. This pre-shared key is an ASCII string no longer than 128 characters. This authentication happens for both policy-based (static routing) and route-based (dynamic routing) VPNs. You can view and update the pre-shared key for a connection with these PowerShell cmdlets:

Get-AzVirtualNetworkGatewayConnectionSharedKey: This command is used to show the pre-shared key.

Set-AzVirtualNetworkGatewayConnectionSharedKey: This command is used to change the pre-shared key to another value.
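If you plan to set your own pre-shared key, you can check it against the constraints described above before applying it. The following Python sketch is one possible approach; the function names are hypothetical, and the alphanumeric alphabet used for key generation is an assumption chosen to stay safely within ASCII, not a requirement stated by Azure.

```python
import secrets
import string

MAX_PSK_LENGTH = 128  # upper bound stated in the text


def is_valid_psk(key: str) -> bool:
    """True when the key is a non-empty ASCII string of at most 128 characters."""
    return 0 < len(key) <= MAX_PSK_LENGTH and key.isascii()


def generate_psk(length: int = 64) -> str:
    """Generate a random alphanumeric key (alphabet choice is an assumption)."""
    if not 0 < length <= MAX_PSK_LENGTH:
        raise ValueError("length must be between 1 and 128")
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


candidate = generate_psk()
print(len(candidate), is_valid_psk(candidate))
```

The `secrets` module is used instead of `random` because the key is a credential and should come from a cryptographically strong source.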

For point-to-site (P2S) VPN scenarios, you can use native Azure certificate authentication, RADIUS server, or Azure AD authentication. For native Azure certificate authentication, a client certificate is presented on the device, which is used to authenticate the users who are connecting. The certificate can be one that was issued by an enterprise certificate authority (CA), or it can be a self-signed root certificate. For native Azure AD, you can use the native Azure AD credentials. Keep in mind that native Azure AD is only supported for the OpenVPN protocol and Windows 10 (Windows 10 requires the use of the Azure VPN Client).

If your scenario requires the enforcement of a second factor of authentication before access to the resource is granted, you can use Azure Multi-Factor Authentication (MFA) with conditional access. Even if you don’t want to implement MFA across your entire company, you can scope the MFA to be employed only for VPN users using conditional access capability.

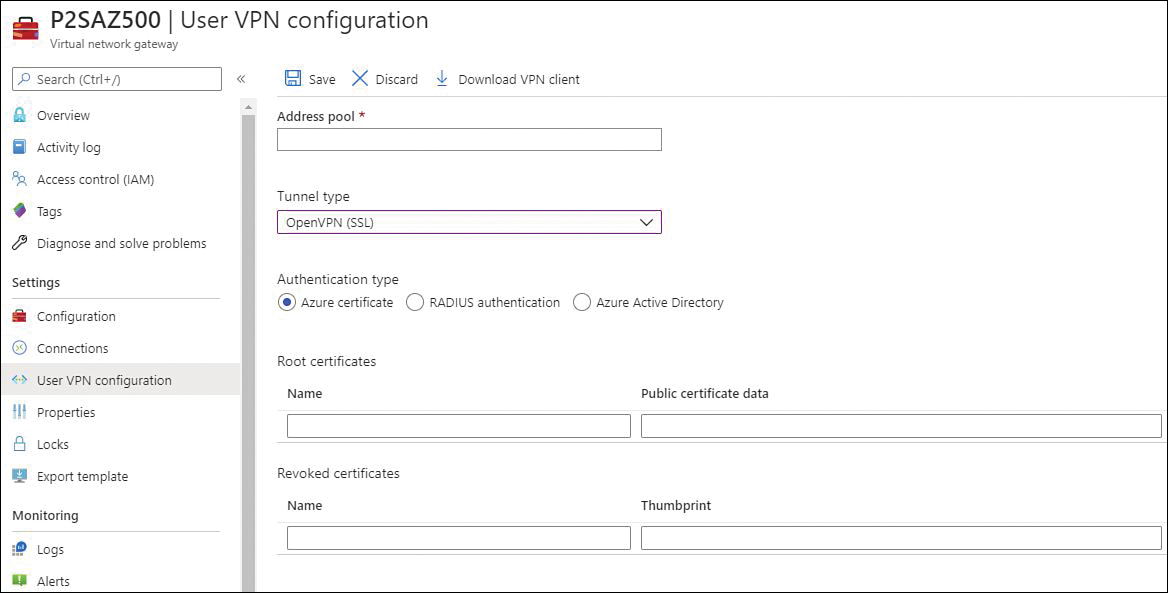

Another option available for P2S is the authentication using RADIUS (which also supports IKEv2 and SSTP VPN). Keep in mind that RADIUS is only supported for VpnGw1, VpnGw2, and VpnGw3 SKUs. For more information about the latest VPN SKUs, visit http://aka.ms/az500vpnsku. Figure 2-12 shows an example of the options that appear when you are configuring a P2S VPN, and you need to select the authentication type.

FIGURE 2-12 Authentication options for VPN

The options that appear right under the Authentication Type section will vary according to the Authentication Type you select. In Figure 2-12, Azure Certificate is chosen, and the page shows options to enter the Name and Public Certification Data for the Root Certificates and the Name and Thumbprint for the Revoked Certificates. If you select RADIUS authentication, you will need to specify the Server IP Address and the Server Secret. Lastly, if you select the Azure Active Directory option, you will need to specify the Tenant’s URL; the Audience (which identifies the recipient resource the token is intended for); and the Issuer (which identifies the Security Token Service (STS) that issued the token). Finally, choose the Azure AD tenant.

Your particular scenario will dictate which option to use. For example, Contoso’s IT department needs to implement a VPN solution that can integrate with a certificate authentication infrastructure that it already has through RADIUS. In this case, you should use RADIUS certificate authentication. When using the RADIUS certificate authentication, the authentication request is forwarded to a RADIUS server, which handles the certificate validation. If the scenario requires that the Azure VPN gateway perform the certificate authentication, the right option would be to use the Azure native certificate authentication.

ExpressRoute encryption

If your connectivity scenario requires a higher level of reliability, faster speeds, consistent latencies, and higher security than typical connections over the Internet, you should use ExpressRoute, which provides layer 3 connectivity between your on-premises network and the Microsoft Cloud.

ExpressRoute supports two different encryption technologies to ensure the confidentiality and integrity of the data that is traversing from on-premises to Microsoft’s network. The options are

Point-to-point encryption by MACsec

End-to-end encryption by IPsec

MACsec encrypts the data at the media access control (MAC) level or at network layer 2. When you enable MACsec, all network control traffic is encrypted, which includes the border gateway protocol (BGP) data traffic and your (customer) data traffic. This means that you can’t encrypt only some of your ExpressRoute circuits.

If you need to encrypt the physical links between your network devices and Microsoft’s network devices when you connect to Microsoft via ExpressRoute Direct, MACsec is preferred. MACsec also allows you to bring your own MACsec key for encryption and store it in Azure Key Vault. If this is the design choice, remember that you will need to decide when to rotate the key.

Keep in mind that when you update the MACsec key, the on-premises resources will temporarily lose connectivity to Microsoft over ExpressRoute. This happens because MACsec configuration only supports pre-shared key mode, so you must update the key on both sides. In other words, if there is a mismatch, traffic won’t flow. Plan an appropriate maintenance window to reduce the impact on production environments.

The other option is to use end-to-end encryption with IPsec, which encrypts data at the Internet protocol (IP)–level or at the network layer 3. A very common scenario is to use IPsec to encrypt the end-to-end connection between on-premises resources and your Azure VNet. In a scenario where you need to encrypt layers 2 and 3, you can enable MACsec and IPsec.

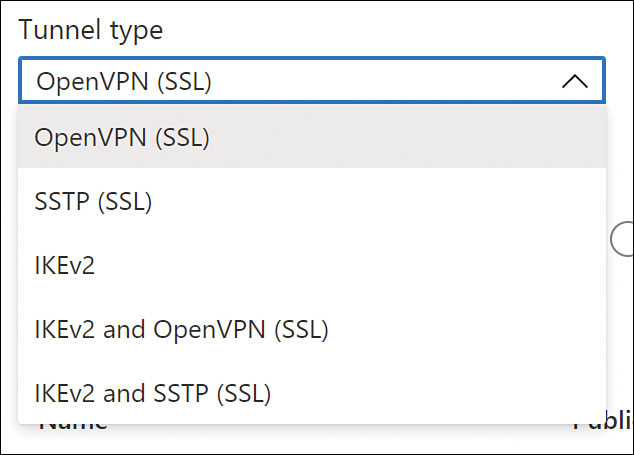

Point-to-site

To implement a point-to-site (P2S) VPN in Azure, you first need to decide what authentication method you will use based on the options that were presented earlier in this section. The authentication method will dictate how the P2S VPN will be configured. When configuring the P2S VPN, you will see the options available under Tunnel Type, as shown in Figure 2-13.

FIGURE 2-13 Different options for the VPN tunnel

Another important variable to select is the protocol that will be used. Use Table 2-1 to select the most-appropriate protocol based on the advantages and limitations:

TABLE 2-1 Advantages and limitations

| Protocol | Advantages | Limitations |
| --- | --- | --- |
| OpenVPN Protocol | This is a TLS VPN-based solution that can traverse most firewalls on the market. Can be used to connect from a variety of operating systems, including Android, iOS (versions 11.0 and above), Windows, Linux, and Mac devices (OSX versions 10.13 and above). | Basic SKU is not supported. Not available for the classic deployment model. |
| Secure Socket Tunneling Protocol (SSTP) | Can traverse most firewalls because it uses TCP port 443. | Only supported on Windows devices. Supports up to 128 concurrent connections, regardless of the gateway SKU. |
| IKEv2 | Standard-based IPsec VPN solution. Can be used to connect from Mac devices (OSX versions 10.11 and above). | Basic SKU is not supported. Not available for the classic deployment model. Uses UDP ports 500 and 4500, which some firewalls block, so you need to ensure that these ports are not blocked on the user’s firewall. |

Site-to-site

A site-to-site (S2S) VPN is used in most scenarios to allow the communication from one location (on-premises) to another (Azure) over the Internet. To configure an S2S, you need the following prerequisites fulfilled before you start:

An on-premises VPN device that is compatible with Azure VPN policy–based configuration or route-based configuration. See the full list at https://aka.ms/az500s2sdevices.

Externally facing public IPv4 address.

IP address range from your on-premises network that will be utilized to allow Azure to route to your on-premises location.

Secure connectivity of virtual networks

Network security groups (NSG) in Azure allow you to filter network traffic by creating rules that allow or deny inbound network traffic to or outbound network traffic from different types of resources. You can think of an NSG as a Virtual LAN or VLAN in a physical network infrastructure. For example, you could configure an NSG to block inbound traffic from the Internet to a specific subnet that only allows traffic from a network virtual appliance (NVA).

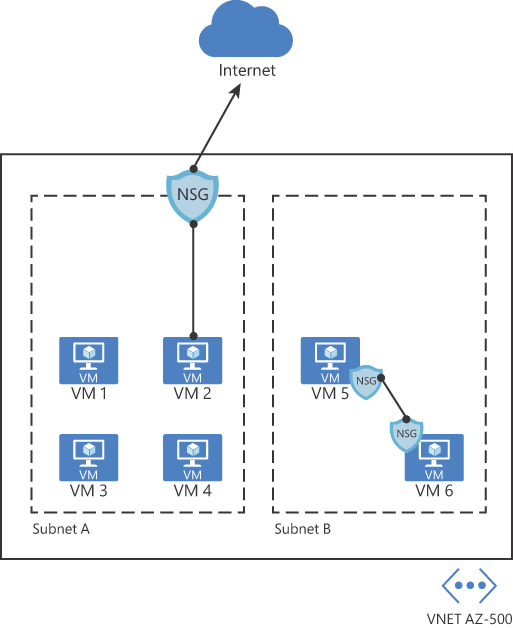

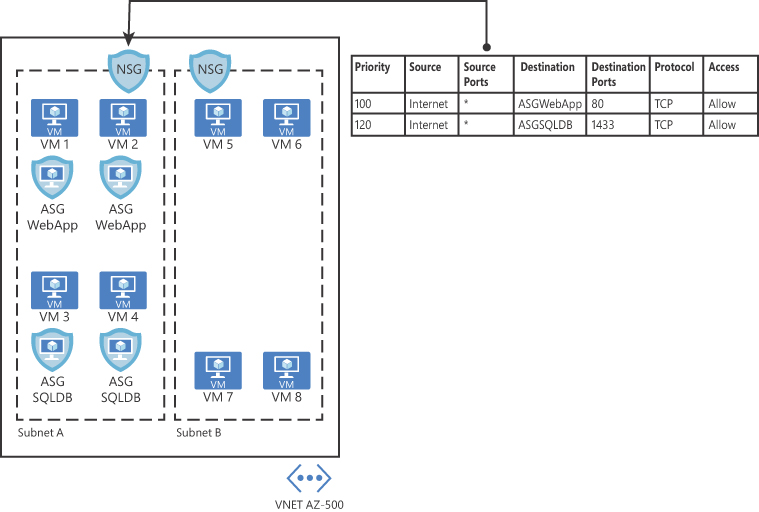

Network security groups can be associated with a subnet or with the network interface of a VM, as shown in Figure 2-14.

In the diagram shown in Figure 2-14, you have two different uses of NSG. In the first case, the NSG is assigned to the subnet A. This can be a good way to secure the entire subnet with a single set of NSG rules. However, there will be scenarios where you might need to control the NSG on the network interface level, which is the case of the second scenario (subnet B), where VM 5 and VM 6 have an NSG assigned to the network interface.

When inbound traffic is coming through the VNet, Azure processes the NSG rules that are associated with the subnet first—if there are any—and then it processes the NSG rules that are associated with the network interface. When the traffic is leaving the VNet (outbound traffic), Azure processes the NSG rules associated with the network interface first, followed by the NSG rules associated with the subnet.

FIGURE 2-14 Different NSG implementations

When you create an NSG, you need to configure a set of rules to harden the traffic. These rules use the following parameters:

Name The name of the rule.

Priority The order in which the rule will be processed. Lower numbers have higher priority, which means that a rule with priority 100 will be evaluated before a rule with priority 300. Once the traffic matches a rule, processing stops and no other rules are evaluated. When configuring the priority, you can assign a number between 100 and 4096.

Source Define the source IP, CIDR Block, Service Tag, or Application Security Group.

Destination Define the destination IP, CIDR Block, Service Tag, or Application Security Group.

Protocol Define the TCP/IP protocol that will be used, which can be set to TCP, UDP, ICMP, or Any.

Port Range Define the port range or a single port.

Action This determines the action that will be taken once this rule is processed. This can be set to Allow or Deny.
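The priority behavior described above (lowest number first, and the first match wins) can be illustrated with a small sketch. This is not how Azure implements NSGs; it is a plain PowerShell model over hypothetical in-memory rule objects (the rule names, ports, and the Test-Port function are invented for illustration), and it does not call any Azure cmdlet.

```powershell
# Hypothetical rules held in memory; these are NOT Azure objects.
$rules = @(
    [pscustomobject]@{ Name = 'DenyAllInbound'; Priority = 65500; Port = '*';  Access = 'Deny'  }
    [pscustomobject]@{ Name = 'AllowFtp';       Priority = 101;   Port = '21'; Access = 'Allow' }
    [pscustomobject]@{ Name = 'DenyFtp';        Priority = 300;   Port = '21'; Access = 'Deny'  }
)

function Test-Port {
    param([int]$Port)

    # Sort by priority (lowest number first) and stop at the first matching rule.
    $match = $rules |
        Sort-Object Priority |
        Where-Object { $_.Port -eq '*' -or [int]$_.Port -eq $Port } |
        Select-Object -First 1

    "Port $Port -> $($match.Access) (matched $($match.Name))"
}

Test-Port 21   # Port 21 -> Allow (matched AllowFtp)
Test-Port 80   # Port 80 -> Deny (matched DenyAllInbound)
```

Note that DenyFtp (priority 300) never takes effect for port 21, because AllowFtp (priority 101) matches first.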

Before creating a new NSG and adding new rules, it is important to know that Azure automatically creates default rules on NSG deployments. Following is a list of the inbound rules that are created:

| Name | Priority | Source | Source Ports | Destination | Destination Ports | Protocol | Access |
| --- | --- | --- | --- | --- | --- | --- | --- |
| AllowVNetInBound | 65000 | VirtualNetwork | 0–65535 | VirtualNetwork | 0–65535 | Any | Allow |
| AllowAzureLoadBalancerInBound | 65001 | AzureLoadBalancer | 0–65535 | 0.0.0.0/0 | 0–65535 | Any | Allow |
| DenyAllInbound | 65500 | 0.0.0.0/0 | 0–65535 | 0.0.0.0/0 | 0–65535 | Any | Deny |

Below is a list of outbound rules that are created:

| Name | Priority | Source | Source Ports | Destination | Destination Ports | Protocol | Access |
| --- | --- | --- | --- | --- | --- | --- | --- |
| AllowVNetOutBound | 65000 | VirtualNetwork | 0–65535 | VirtualNetwork | 0–65535 | Any | Allow |
| AllowInternetOutBound | 65001 | 0.0.0.0/0 | 0–65535 | Internet | 0–65535 | Any | Allow |
| DenyAllOutBound | 65500 | 0.0.0.0/0 | 0–65535 | 0.0.0.0/0 | 0–65535 | Any | Deny |
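If you want to confirm these default rules on an NSG that already exists, a sketch along the following lines may help. It assumes the Az.Network module is installed, that you are signed in with Connect-AzAccount, and that an NSG named AZ500NSG exists in the resource group AZ500RG (both names are placeholders borrowed from the example used later in this section).

```powershell
# Retrieve an existing NSG (placeholder names; adjust to your environment).
$nsg = Get-AzNetworkSecurityGroup -Name "AZ500NSG" -ResourceGroupName "AZ500RG"

# The platform-created rules are exposed separately from your custom rules.
$nsg.DefaultSecurityRules |
    Sort-Object Direction, Priority |
    Format-Table Name, Direction, Priority, Access, Protocol
```

Custom rules that you add yourself appear under the SecurityRules property instead.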

Follow the steps below to create and configure an NSG, which in this example will be associated with a subnet:

Navigate to the Azure portal by opening https://portal.azure.com.

In the search bar, type network security, and under Services, click Network Security Groups; the Network Security Groups page appears.

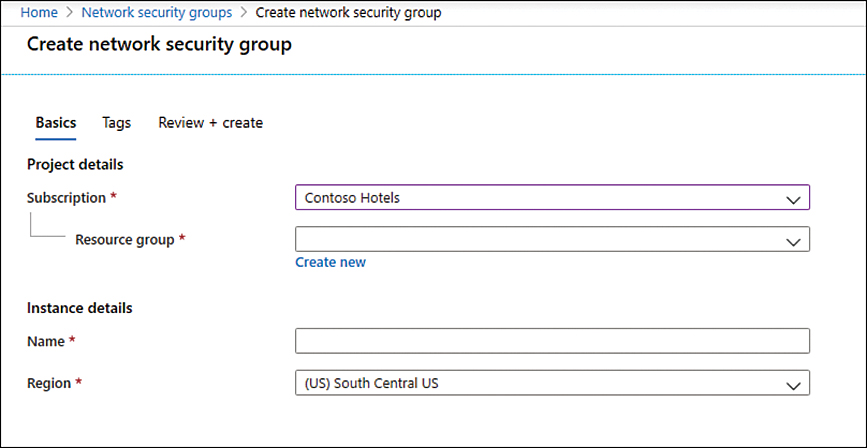

Click the Add button; the Create Network Security Group page appears, as shown in Figure 2-15.

In the Subscription field, select the subscription where this NSG will reside.

In the Resource Group field, select the resource group in which this NSG will reside.

In the Name field, type the name for this NSG.

In the Region field, select the Azure region in which this NSG will reside.

Click the Review + Create button, review the options, and click the Create button.

Once the deployment is complete, click the Go To Resource button. The NSG page appears.

FIGURE 2-15 Initial parameters of the network security group

At this point, you have successfully created your NSG, and you can see that the default rules are already part of it. The next step is to create the custom rules, which can be inbound or outbound. (This example uses inbound rules.) The same operation could be done using the New-AzNetworkSecurityGroup PowerShell cmdlet, as shown in the following example:

New-AzNetworkSecurityGroup -Name "AZ500NSG" -ResourceGroupName "AZ500RG" -Location "westus"

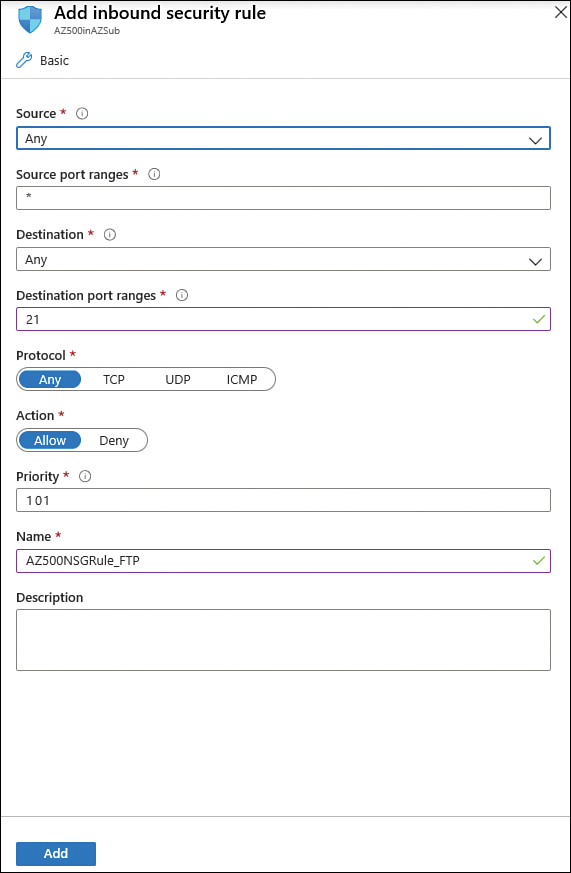

Follow these steps to create an inbound rule that allows FTP traffic from any source to a specific server using Azure portal:

On the NSG page, under Settings in the left navigation pane, click Inbound Security Rules.

Click the Add button; the Add Inbound Security Rule blade appears, as shown in Figure 2-16.

On this blade, you start by specifying the source, which can be an IP address, a service tag, or an ASG. If you leave the default option (Any), you are allowing any source. For this example, leave this set to Any.

In the Source Port Ranges field, you can harden the source port. You can specify a single port or an interval. For example, you can allow traffic from ports 50 to 100. Also, you can use a comma to add another condition to the range, such as 50–100, 135, which specifies ports 50 through 100 and 135. Leave the default selection (*), which allows any source port.

In the Destination field, the options are nearly the same as the Source field. The only difference is that you can select the VNet as the destination. For this example, change this option to IP Addresses and enter the internal IP address of the VM that you created at the beginning of this chapter.

In the Destination Port Ranges field, specify the destination port that will be allowed. The default port is 8080; for this example, change it to 21.

FIGURE 2-16 Creating an inbound security rule for your NSG

In the Protocol field, you can select which protocol you are going to allow; in this case, change it to TCP.

Leave the Action field set to Allow, which is the default selection.

You can also change the Priority of this rule. Remember that the rule with the lowest priority number is evaluated first. For this example, change it to 101.

In the Name field, change it to AZ500NSGRule_FTP and click the Add button.

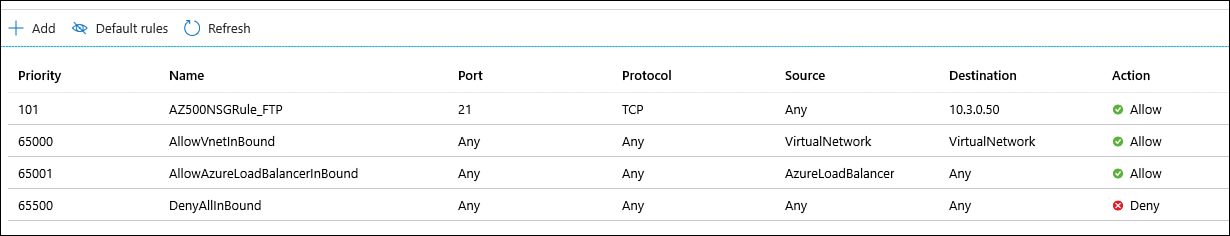

The rule will be created and added to the inbound rules of the NSG. At this point, your inbound rules should look like the rules shown in Figure 2-17.

FIGURE 2-17 List of inbound rules

While these are the steps to create the inbound rule, this NSG has no use if it is not associated with a subnet or a virtual network interface. For this example, you will associate this NSG to a subnet. The intent is to block all traffic to this subnet and only allow FTP traffic to this specific server. Use the following steps to create this association:

On the left-hand side of the NSG Inbound Security Rules page, in the navigation pane of the Network security group, under Settings, click Subnets.

Click the Associate button, and in the Virtual Network drop-down menu, select the VNet where the subnet resides.

After this selection, you will see that the Subnet drop-down menu appears; select the subnet and click the OK button.

You could also use PowerShell to create an NSG rule and then associate the NSG with a subnet. To create the rule configuration using PowerShell, use the New-AzNetworkSecurityRuleConfig cmdlet, as shown in the following example:

$MyRule1 = New-AzNetworkSecurityRuleConfig -Name ftp-rule -Description "Allow FTP" -Access Allow -Protocol Tcp -Direction Inbound -Priority 100 -SourceAddressPrefix * -SourcePortRange * -DestinationAddressPrefix * -DestinationPortRange 21
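The rule configuration by itself does not create or attach anything. The following sketch shows one way the remaining steps could look: creating the NSG with the rule and associating it with a subnet. The virtual network name (AZ500VNet) and subnet name (SubnetA) are hypothetical placeholders; the NSG and resource group names reuse the earlier example.

```powershell
# Build the rule configuration (same rule as in the example above).
$rule = New-AzNetworkSecurityRuleConfig -Name "ftp-rule" -Description "Allow FTP" `
    -Access Allow -Protocol Tcp -Direction Inbound -Priority 100 `
    -SourceAddressPrefix * -SourcePortRange * `
    -DestinationAddressPrefix * -DestinationPortRange 21

# Create the NSG and include the rule at creation time.
$nsg = New-AzNetworkSecurityGroup -Name "AZ500NSG" -ResourceGroupName "AZ500RG" `
    -Location "westus" -SecurityRules $rule

# Load the VNet and the target subnet (placeholder names).
$vnet   = Get-AzVirtualNetwork -Name "AZ500VNet" -ResourceGroupName "AZ500RG"
$subnet = Get-AzVirtualNetworkSubnetConfig -VirtualNetwork $vnet -Name "SubnetA"

# Update the subnet definition in memory, keeping its address prefix, then commit the change.
Set-AzVirtualNetworkSubnetConfig -VirtualNetwork $vnet -Name $subnet.Name `
    -AddressPrefix $subnet.AddressPrefix -NetworkSecurityGroup $nsg | Out-Null
$vnet | Set-AzVirtualNetwork
```

The association only takes effect after Set-AzVirtualNetwork writes the modified virtual network back to Azure.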

Application security group

If you need to define granular network security policies based on workloads that are centralized on application patterns instead of explicit IP addresses, you need to use the application security group (ASG). An ASG allows you to group VMs and secure applications by filtering traffic from trusted segments of your network, which adds an extra level of micro-segmentation.

You can deploy multiple applications within the same subnet and isolate traffic based on ASGs. Another advantage is that you can reduce the number of NSGs in your subscription. For example, in some scenarios, you can use a single NSG for multiple subnets of your virtual network and perform the micro-segmentation on the application level by using ASG. Figure 2-18 shows an example of how ASG can be used in conjunction with NSG.

In the example shown in Figure 2-18, two ASGs have been created: one to define the application pattern for a web application and another to define the application pattern for a SQL database. Two VMs are part of each group, and the ASGs are used in the security rules of the NSG located in subnet A. In an NSG security rule, you can specify one ASG as the source and destination, but you cannot specify multiple ASGs in the source or destination.

FIGURE 2-18 ASG used as the destination in the NSG security rules

When you deploy VMs, you can make them members of the appropriate ASGs. If your VM has multiple workloads (Web App and SQL, for example), you can make the VM a member of multiple ASGs, one for each application. This will allow you to have different types of access to the same VM according to the workload. This approach also helps to implement a zero-trust model by limiting access to the application flows that are explicitly permitted. Follow these steps to create an ASG:

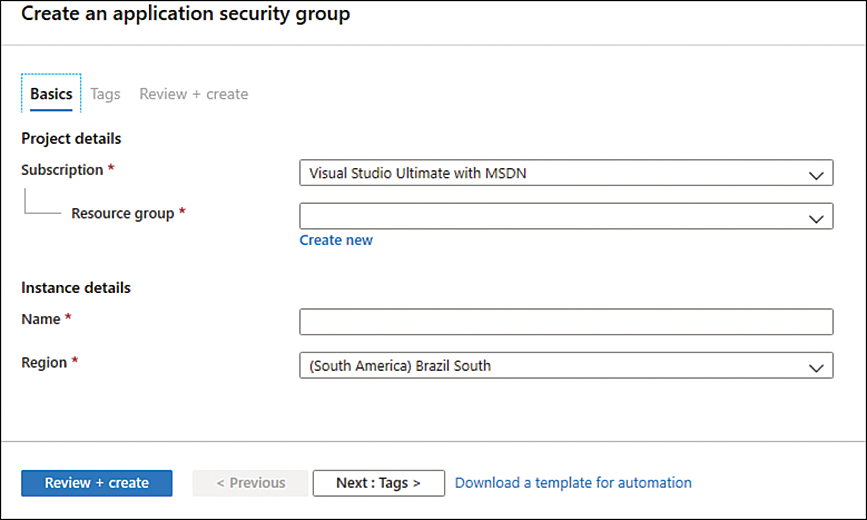

Navigate to the Azure portal at https://portal.azure.com.

In the search bar, type application security, and under Services, click Application Security Groups.

In the Application Security Groups dashboard, click the Add button, which makes the Create An Application Security Group page appear, as shown in Figure 2-19.

FIGURE 2-19 Create An Application Security Group

In the Subscription drop-down menu, select the appropriate subscription for this ASG.

In the Resource Group drop-down menu, select the resource group in which this ASG will reside.

In the Name field, type a name for this ASG.

In the Region drop-down menu, select the appropriate region for this ASG and click the Review + Create button.

On the Review + Create page, click the Create button.

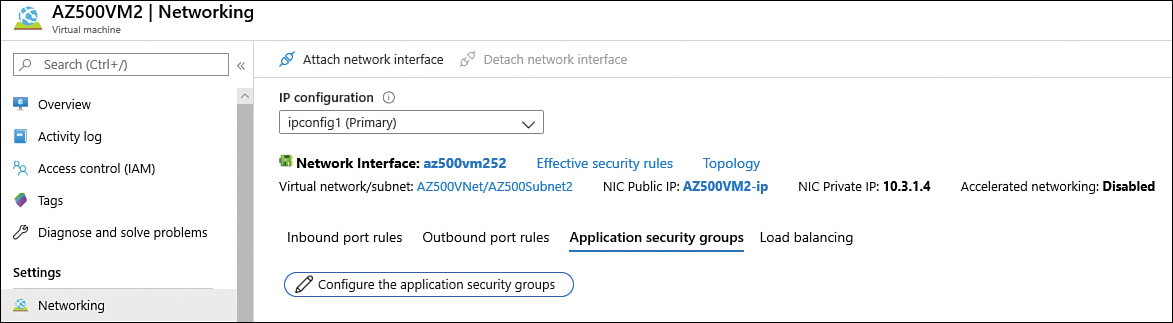

Now that the ASG is created, you need to associate this ASG to the network interface of the VM that has the workload you want to control. Follow these steps to perform this association:

Navigate to the Azure portal at https://portal.azure.com.

In the search bar, type virtual, and under Services, click Virtual Machines.

Click the VM for which you want to perform this association.

On the VM’s page, in the Settings section, click the Networking option.

Click the Application Security Group tab, and the page shown in Figure 2-20 appears.

FIGURE 2-20 Associating the ASG to the virtual network interface card

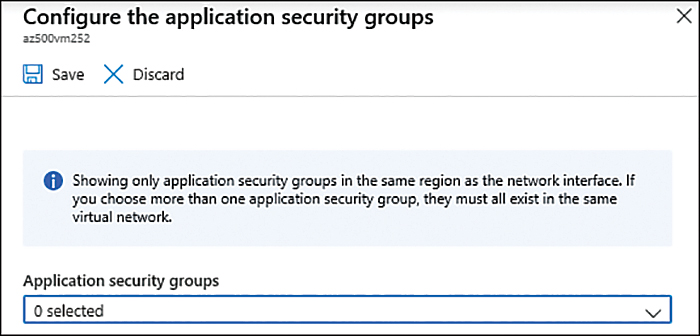

Click the Configure The Application Security Groups button, and the Configure The Application Security Groups blade appears, as shown in Figure 2-21.

FIGURE 2-21 Selecting the ASG

Select the appropriate ASG and click the Save button.

You can also use the New-AzApplicationSecurityGroup cmdlet to create a new ASG, as shown in the following example:

New-AzApplicationSecurityGroup -ResourceGroupName "MyRG" -Name "MyASG" -Location "West US"

Now when you create your new NSG rule for inbound or outbound traffic, you can select the ASG as source or destination.
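As a sketch of that last step, the following example adds an inbound rule to an existing NSG and uses the ASG as the destination. The NSG name (MyNSG), rule name, priority, and ports are hypothetical choices for illustration; the ASG and resource group names reuse the example above.

```powershell
# Create the ASG (as in the example above) and keep the returned object.
$asg = New-AzApplicationSecurityGroup -ResourceGroupName "MyRG" -Name "MyASG" -Location "West US"

# Load an existing NSG (placeholder name).
$nsg = Get-AzNetworkSecurityGroup -Name "MyNSG" -ResourceGroupName "MyRG"

# Add a rule whose destination is the ASG rather than an IP address, then save the NSG.
$nsg | Add-AzNetworkSecurityRuleConfig -Name "Allow-Web-To-MyASG" -Access Allow `
        -Protocol Tcp -Direction Inbound -Priority 110 `
        -SourceAddressPrefix Internet -SourcePortRange * `
        -DestinationApplicationSecurityGroupId $asg.Id -DestinationPortRange 80,443 |
    Set-AzNetworkSecurityGroup
```

The rule applies only to network interfaces that are members of the ASG, so the association steps described earlier are still required.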

Create and configure Azure Firewall

While an NSG provides stateful packet filtering and custom security rules, you will need a more robust solution when you need to protect an entire virtual network. If your company needs a fully stateful, centralized network firewall as a service (FWaaS) that provides network and application-level protection across different subscriptions and virtual networks, you should choose Azure Firewall.

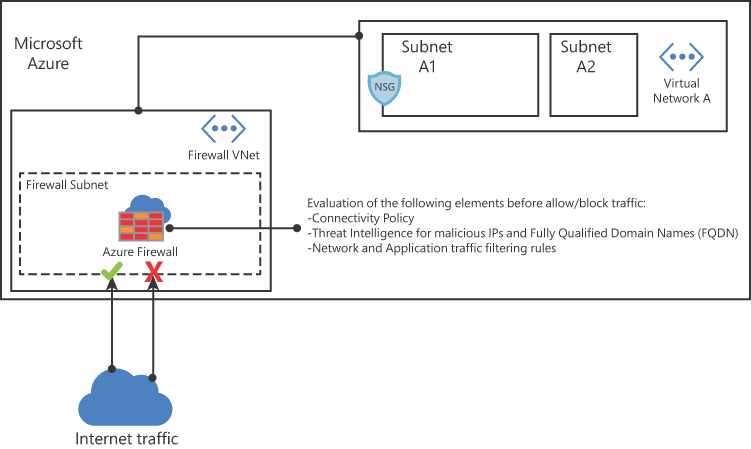

Also, Azure Firewall can be used in scenarios where you need to span multiple availability zones for increased availability. Although there’s no additional cost for an Azure Firewall deployed in an availability zone, there are additional costs for inbound and outbound data transfers associated with Availability Zones. Figure 2-22 shows an Azure Firewall in its own VNet and subnet, allowing some traffic and blocking other traffic based on a series of evaluations.

FIGURE 2-22 Azure Firewall topology

As shown in Figure 2-22, the Azure Firewall will perform a series of evaluations prior to allowing or blocking the traffic. Just as with an NSG, the rules in Azure Firewall are processed according to the rule type in priority order (lower numbers to higher numbers). A rule collection name may contain only letters, numbers, underscores, periods, or hyphens. You can configure NAT rules, network rules, and application rules on Azure Firewall. Keep in mind that Azure Firewall uses a static public IP address for your virtual network resources, and you need that before deploying your firewall. Azure Firewall also supports learning routes via Border Gateway Protocol (BGP).

To evaluate outbound traffic, Azure Firewall will query the network and application rules. Just as with an NSG, no other rules are processed when a match is found in a network rule. Azure Firewall will use the infrastructure rule collection if there is no match. This collection is created automatically by Azure Firewall and includes platform-specific fully qualified domain names (FQDN). If there is still no match, Azure Firewall denies outgoing traffic.

Azure Firewall uses rules based on Destination Network Address Translation (DNAT) for incoming traffic evaluation. These rules are also evaluated in priority and before network rules. An implicit corresponding network rule to allow the translated traffic is added if a match is found. Although this is the default behavior, you can override this by explicitly adding a network rule collection with deny rules that match the translated traffic (if needed).
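A DNAT rule of this kind could be sketched in PowerShell as follows. All names and addresses are assumptions for illustration: azFw and MyRG are placeholder firewall and resource group names, 203.0.113.10 stands in for the firewall's public IP address (it is a documentation-only address), and 10.30.0.4 stands in for an internal web server.

```powershell
# Load the existing firewall (placeholder names).
$Azfw = Get-AzFirewall -Name "azFw" -ResourceGroupName "MyRG"

# Translate HTTPS traffic arriving at the firewall's public IP to an internal server.
$NatRule = New-AzFirewallNatRule -Name "WebDnat" -Protocol "TCP" -SourceAddress "*" `
    -DestinationAddress "203.0.113.10" -DestinationPort "443" `
    -TranslatedAddress "10.30.0.4" -TranslatedPort "443"

# Group the rule into a NAT rule collection and attach it to the firewall.
$NatCollection = New-AzFirewallNatRuleCollection -Name "Nat-Coll01" -Priority 100 -Rule $NatRule
$Azfw.NatRuleCollections.Add($NatCollection)

# Commit the updated configuration.
Set-AzFirewall -AzureFirewall $Azfw
```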

In Figure 2-22, you also saw that Azure Firewall leverages Microsoft Threat Intelligence during the traffic evaluation. The Microsoft Threat Intelligence is powered by Intelligent Security Graph and is used by many other services in Azure, including Microsoft Defender for Cloud.

Azure Firewall is available in two tiers, Premium and Standard. The Standard tier includes the following capabilities:

Built-in high availability

Availability Zones

Unrestricted cloud scalability

Application FQDN filtering rules

Network traffic filtering rules

FQDN tags

Service tags

Threat intelligence

Outbound SNAT support

Inbound DNAT support

Multiple public IP addresses

Azure Monitor logging

Forced tunneling

Web categories

Certifications

While these features are enough for many organizations, there will be scenarios where the environment is highly sensitive and regulated, which requires features only available in the next generation Firewall. These features are part of the Azure Firewall Premium tier, which includes:

TLS inspection With this capability it is possible to decrypt outbound traffic, analyze the data, and then encrypt the data again before sending it to the destination.

Intrusion detection and prevention system (IDPS) This is a network-based IDPS that enables you to monitor network traffic for malicious activity. In addition, IDPS enables you to log information about these activities, report it, and optionally create a mechanism to attempt to block it.

URL filtering This capability enhances the Azure Firewall’s FQDN filtering feature to consider an entire URL. For example, www.fabrikam.com/a/b instead of www.fabrikam.com.

Web categories This feature allows you to control user access to websites by categories such as gambling websites, social media websites, and others.

Now that you know the key components of the Azure Firewall, use the following steps to deploy and configure it:

Navigate to the Azure portal at https://portal.azure.com.

In the main dashboard, click Create A Resource.

Type firewall and click Firewall in the drop-down menu.

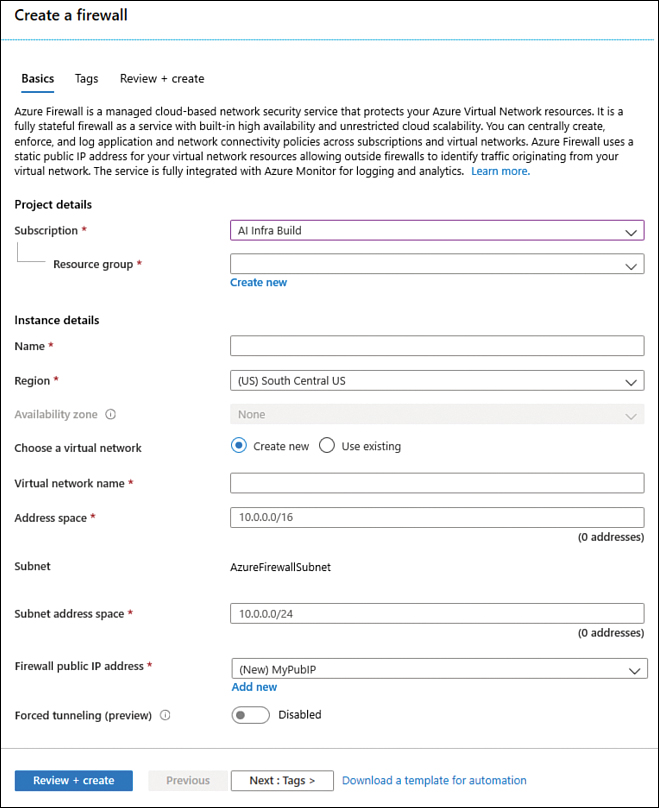

On the Firewall page, click the Create button, and the Create A Firewall blade appears, as shown in Figure 2-23.

If you have multiple subscriptions, make sure to click the Subscription drop-down menu and select the one that you want to use to deploy Azure Firewall.

In the Resource Group drop-down menu, select the resource group in which you want to deploy your Azure Firewall.

In the Instance Details section’s Name field, type the name for this Azure Firewall instance. There is a 50-character limit for the name.

In the Region drop-down menu, select the region where the Azure Firewall will reside.

In the Availability Zone drop-down menu, select the availability zone in which the firewall will reside.

FIGURE 2-23 Creating a new Azure Firewall

In the Firewall Tier field, select the tier (Standard or Premium) that you want to use.

In the Firewall Management section, you can select the use of Firewall policy or classic Firewall rules. Keep in mind that if you use a Firewall policy, you will need to select an existing policy or create a new one.

For the Choose Virtual Network option, select Use Existing and select an existing VNet.

In the Virtual Network drop-down menu, select the VNet to which you want to deploy Azure Firewall.

In the Firewall Public IP Address field, select an existing unused public IP address or click Add New to create a new one in case all your public IPs are already allocated.

You can either enable or disable Force Tunneling. The default option is Disabled. By enabling this option, you are instructing Azure Firewall to route all Internet-bound traffic to a designated next hop instead of going directly to the Internet. Keep in mind that if you configure Azure Firewall to support forced tunneling, you can’t undo this configuration. Leave the default selection and click the Review + Create button.

The creation of the Azure Firewall will take several minutes. After the deployment is complete, you can click the Go To Resource button.

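You can also deploy a new Azure Firewall using the New-AzFirewall cmdlet, as shown in the following example, where $MyVNet and $MyPubIP hold the virtual network and public IP address objects returned by Get-AzVirtualNetwork and Get-AzPublicIpAddress:

New-AzFirewall -Name "azFw" -ResourceGroupName MyRG -Location centralus -VirtualNetwork $MyVNet -PublicIpAddress $MyPubIP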

Creating an application rule

Now that the Azure Firewall is created, you can start creating rules. To start, you are going to create an application rule to allow outbound access to www.bing.com. Follow these steps to create a rule:

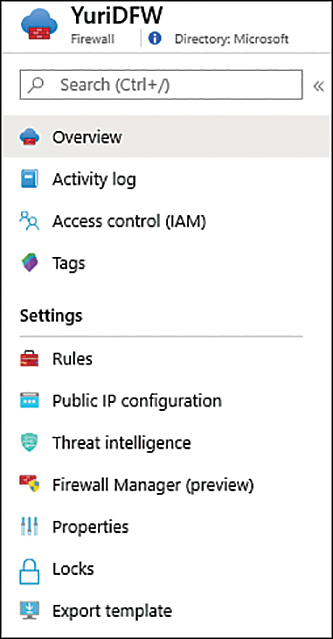

On the page that you have open for the firewall you created, click Rules, as shown in Figure 2-24.

FIGURE 2-24 Firewall options

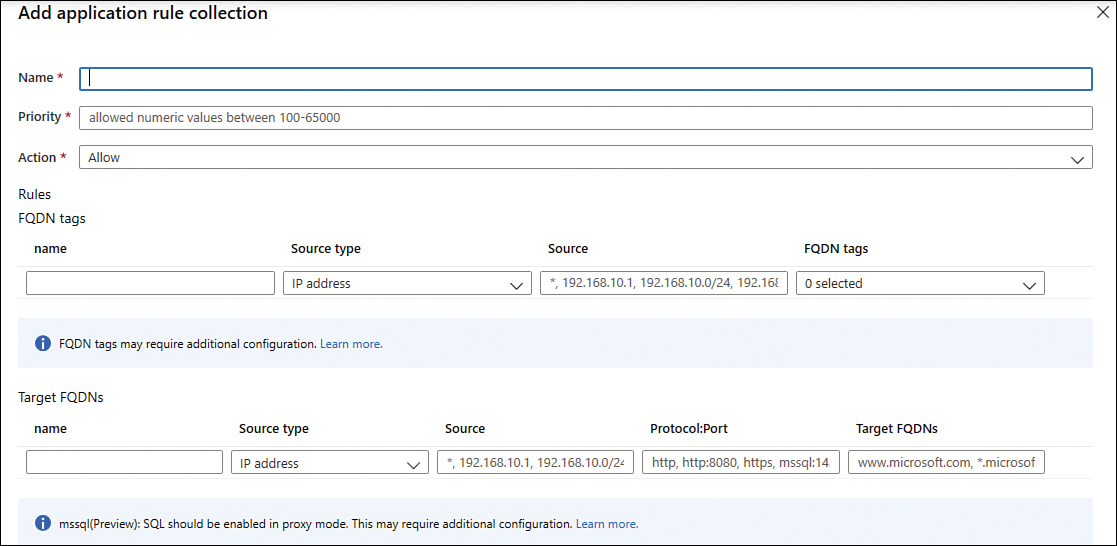

Click the Application Rule Collection tab and then click the + Add Application Rule Collection option. The Add Application Rule Collection page appears, as shown in Figure 2-25.

In the Name field, type a name for the rule; for this example, type Bing.

In the Priority field, type the priority for this rule; for this example, type 100.

In the Action drop-down menu, leave the default option (Allow).

FIGURE 2-25 Creating a new application rule collection

No changes are necessary in the FQDN Tags field.

Under the Target FQDNs section, type AllowBing in the Name field and leave the Source Type set to IP Address.

Type * in the Source field.

In the Protocol:Port field, type http,https.

In the Target FQDNs field, type www.bing.com.

Click the Add button.

In case you want to perform the same configuration using PowerShell, you can use the New-AzFirewallApplicationRule cmdlet, as shown here:

$Azfw = Get-AzFirewall -Name "azFw" -ResourceGroupName MyRG   # the firewall created earlier
$MyAppRule = New-AzFirewallApplicationRule -Name AllowBing -SourceAddress * `
  -Protocol http, https -TargetFqdn www.bing.com
$AppRuleCollection = New-AzFirewallApplicationRuleCollection -Name App-Coll01 `
  -Priority 100 -ActionType Allow -Rule $MyAppRule
$Azfw.ApplicationRuleCollections = $AppRuleCollection
Set-AzFirewall -AzureFirewall $Azfw

Creating a network rule

Creating a network rule is very similar to creating an application rule. For this example, you are going to create an outbound network rule that allows access to an external DNS Server. Follow these steps to create your network rule:

On the Firewalls rules page, click the Network Rule Collection tab.

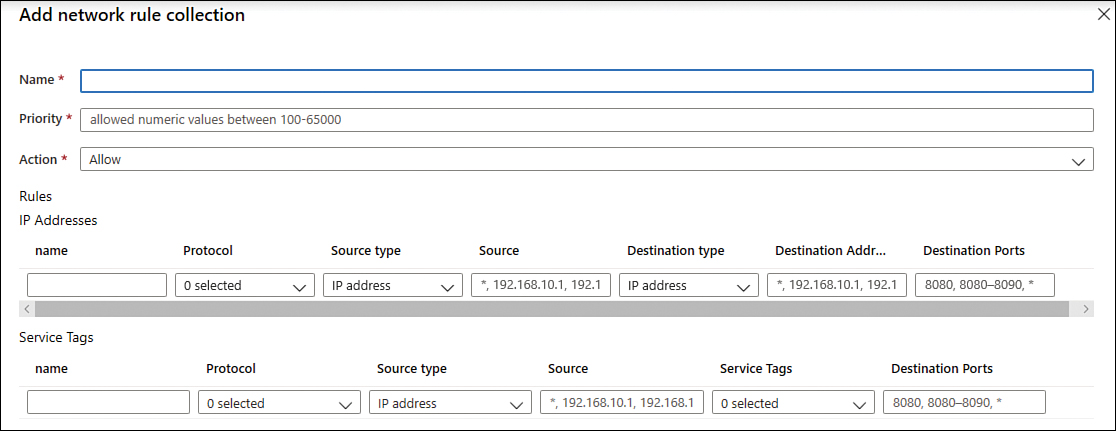

Click the Add Network Rule Collection option; the Add Network Rule Collection blade appears, as shown in Figure 2-26.

FIGURE 2-26 Creating a new network rule collection

In the Name field, type DNS.

In the Priority field, type 200.

In the Action field, leave the default selection (Allow).

Under the IP Addresses section, type DNSOutbound in the Name field.

Select UDP in the Protocol field.

Leave IP Address selection in the Source Type field.

In the Source field, type the range of your subnet, such as 10.30.0.0/24.

Leave the IP Address selection in the Destination Type field.

In the Destination Address field, type the IP address of the external DNS.

In the Destination Port, type 53.

Click the Add button.

In case you want to perform the same configuration using PowerShell, you can use the New-AzFirewallNetworkRule cmdlet, as shown here:

New-AzFirewallNetworkRule -Name "DNSOutbound" -Protocol UDP -SourceAddress "10.30.0.0/24" -DestinationAddress IP_of_the_DNSSErver -DestinationPort 53
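On its own, New-AzFirewallNetworkRule only builds a rule object in memory. A sketch of the remaining steps, which mirror the portal walkthrough above (collection name DNS, priority 200, action Allow), might look like this. The firewall and resource group names are placeholders, and 203.0.113.53 is a documentation-only address standing in for the external DNS server.

```powershell
# Load the existing firewall (placeholder names).
$Azfw = Get-AzFirewall -Name "azFw" -ResourceGroupName "MyRG"

# Build the rule: allow DNS queries from the subnet to the external DNS server.
$NetRule = New-AzFirewallNetworkRule -Name "DNSOutbound" -Protocol UDP `
    -SourceAddress "10.30.0.0/24" -DestinationAddress "203.0.113.53" -DestinationPort 53

# Wrap the rule in a collection with a priority and an action.
$NetRuleCollection = New-AzFirewallNetworkRuleCollection -Name "DNS" -Priority 200 `
    -Rule $NetRule -ActionType "Allow"

# Attach the collection to the firewall and commit the change.
$Azfw.NetworkRuleCollections.Add($NetRuleCollection)
Set-AzFirewall -AzureFirewall $Azfw
```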

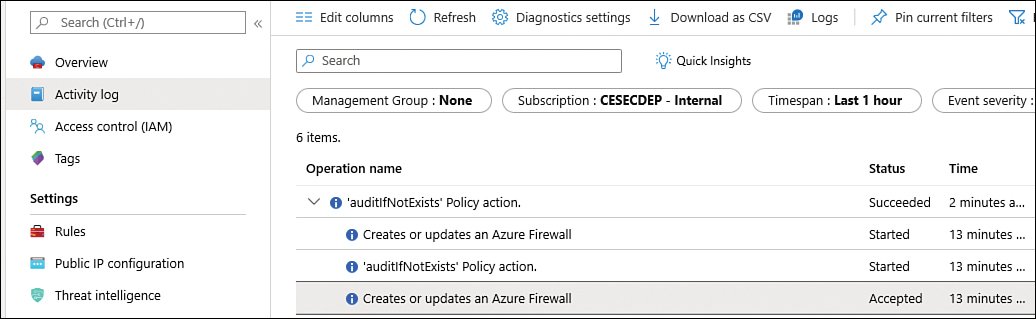

Firewall logs

When system admins need to audit configuration changes in the Azure Firewall, they should use Azure Activity logs. For example, the creation of those two rules (application and network) will appear in the Activity Log, which will look similar to Figure 2-27.

FIGURE 2-27 Activity logs showing the changes in the Azure Firewall

While these actions are automatically logged in the Azure Activity Log, the diagnostic logging for application and network rules is not enabled by default. You can also enable Firewall metrics. These metrics are collected every minute and can be useful for alerting because they can be sampled frequently. When you enable metrics collection, the following metrics will be available for Azure Firewall:

Application rules hit count

Network rules hit count

Data processed

Firewall health state

SNAT port utilization

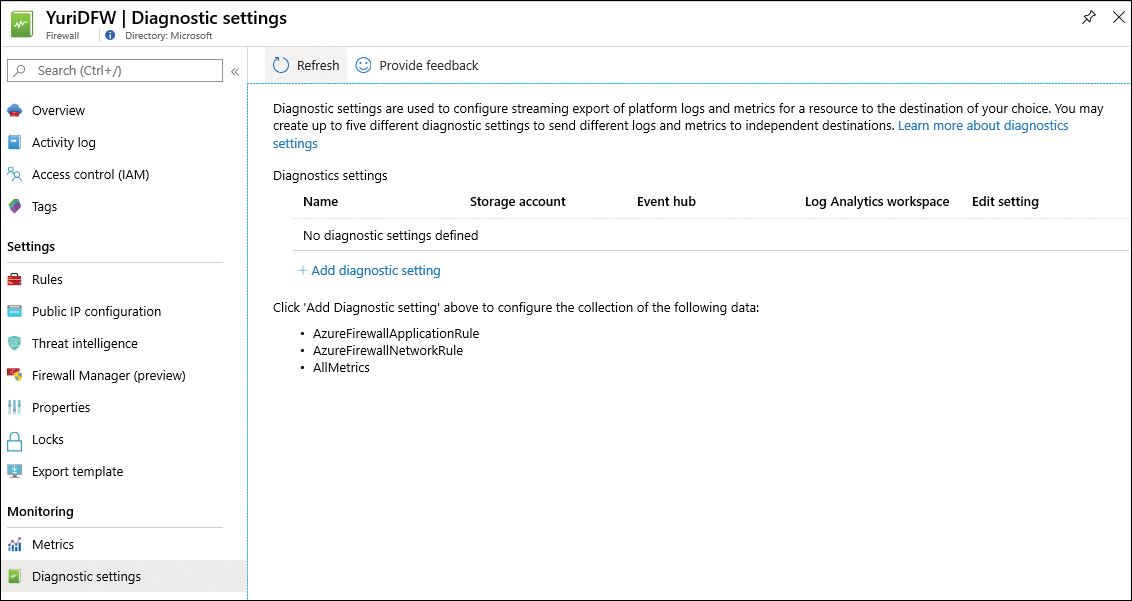

These metrics and the diagnostic logging for application and network rules can be enabled in the Azure Firewall dashboard. Use the following steps to enable these logs:

On the Firewalls page, in the left navigation pane, under the Monitoring section, click Diagnostic Settings. The Diagnostic Settings page appears, as shown in Figure 2-28.

FIGURE 2-28 Diagnostic settings page

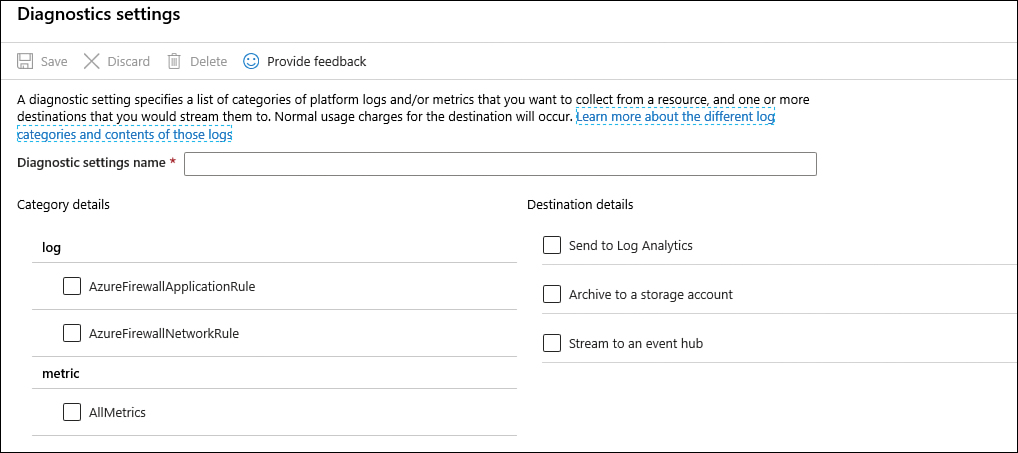

Click the Add Diagnostic Setting option, which makes the Diagnostic Settings blade appear, as shown in Figure 2-29.

FIGURE 2-29 Diagnostic Settings page

In the Diagnostic Settings Name field, type a name for this setting.

In the Log section, enable AzureFirewallApplicationRule and AzureFirewallNetworkRule.

In the Metric section, enable AllMetrics.

In the Destination Details section, you can choose where you want to send the logs: Log Analytics, Storage Account, or Event Hub. If you need to retain logs for a longer duration for review as needed, choosing Storage Account is the best option. If you need to send the logs to a security information and event management (SIEM) tool, the Event Hub is the best option. If you need more real-time monitoring, Log Analytics is a better fit. Notice that you can select multiple options, which allows you to address multiple needs.

For this example, select Send To Log Analytics, and select the workspace in which the logs will reside.

Click Save and once it is saved, close the blade.

Notice that the name of your logging configuration now appears on the Diagnostic Settings page.

You can use the Set-AzDiagnosticSetting cmdlet to enable diagnostic logging, as shown in the following example:

Set-AzDiagnosticSetting -ResourceId /subscriptions/<subscriptionId>/resourceGroups/<resource group name>/providers/Microsoft.Network/azureFirewalls/<Firewall name> `
  -StorageAccountId /subscriptions/<subscriptionId>/resourceGroups/<resource group name>/providers/Microsoft.Storage/storageAccounts/<storage account name> `
  -Enabled $true

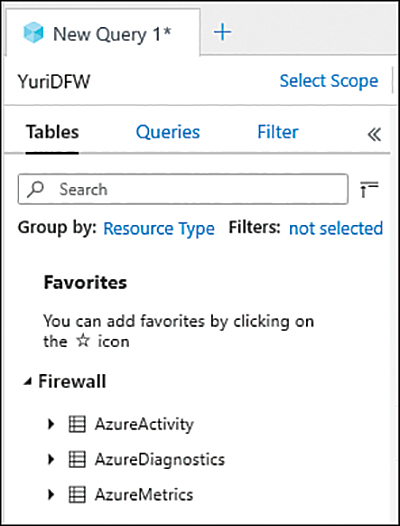

Now that the diagnostic logging is configured, click Logs in the left navigation pane in the Monitoring section. The Log Analytics workspace appears with the Azure Firewall schema, as shown in Figure 2-30.

FIGURE 2-30 Schema for the Azure Firewall in Log Analytics

To query on the Log Analytics workspace, you use Kusto Query Language (KQL). You can use the sample query to retrieve the logs that are related to the network rules:

AzureDiagnostics | where Category == "AzureFirewallNetworkRule"

Create and configure Azure Firewall Manager

Azure Firewall Manager can be used when the organization needs a security management solution that enables centralized security policy and route management. Azure Firewall Manager can provide this type of benefit for two types of Azure network architecture:

Secured virtual hub: this type of network is utilized when the organization uses an Azure Virtual WAN Hub to create hub-and-spoke architectures. When security and routing policies are associated with such a hub, it is referred to as a secured virtual hub.

Hub virtual network: this type of network is utilized when the organization is using an Azure virtual network that they create and manage on their own. When security policies are associated with such a hub, it is referred to as a hub virtual network.

When designing the architecture of your Azure network, consider the technical requirements of the scenario. If these requirements include one or more of the items shown below, then you should use Azure Firewall Manager:

Centralized deployment and configuration of multiple Azure Firewall instances that span through different Azure regions and subscriptions

Centralized management of Azure Firewall policies across multiple secured virtual hubs

Ability to integrate with third-party Security-as-a-Service (SECaaS) providers to obtain additional network protection for VNet and branch Internet connections

Ability to route traffic to a secured hub for filtering and logging purposes without having to manually set up User Defined Routes (UDR) on spoke virtual networks

One of the main components of Azure Firewall Manager is the Firewall policy. This policy contains NAT, network and application rule collections, and Threat Intelligence settings. A Firewall policy is a global resource that can be used across multiple Azure Firewall instances and across regions and subscriptions. You can create a policy using Azure portal, REST API, templates, Azure PowerShell, and CLI. You can also migrate existing rules from Azure Firewall using the portal or Azure PowerShell to create policies.

You can create new policies, or you can create a policy inherited from other existing policies. Policies created with non-empty parent policies inherit all rule collections from the parent policy. It is important to mention that when you inherit a policy, any changes to the parent policy will be automatically applied down to associated firewall child policies.
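Policy inheritance can be sketched with the New-AzFirewallPolicy cmdlet, which accepts the resource ID of a parent policy through its -BasePolicy parameter. The policy names, resource group, and region below are placeholders chosen for illustration.

```powershell
# Create a parent policy that holds the organization-wide rule collections.
$parent = New-AzFirewallPolicy -Name "ParentPolicy" -ResourceGroupName "MyRG" -Location "centralus"

# Create a child policy that inherits from the parent by referencing its resource ID.
$child = New-AzFirewallPolicy -Name "ChildPolicy" -ResourceGroupName "MyRG" `
    -Location "centralus" -BasePolicy $parent.Id
```

Rule collections added to the child policy apply in addition to those inherited from the parent.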

When taking the AZ-500 exam, make sure to carefully read the scenario description and the organization’s requirements. Depending on the organization’s requirements, you will deploy Azure Firewall Manager to either a virtual hub or a hub virtual network.

If you need to secure your cloud network traffic destined to private IP addresses, Azure PaaS, and the Internet, then you should deploy Azure Firewall Manager to a virtual hub. If you need to connect your on-premises network to an Azure virtual network to create a hybrid network, you can create a hub virtual network. By deploying Azure Firewall Manager to this hub virtual network, you are securing your hybrid network traffic destined to private IP addresses, Azure PaaS, and the Internet.

The main use case scenario for Azure Firewall Manager is the centralized management of policies across multiple secured virtual hubs. Azure Firewall Manager supports both classic rules and policies, though when designing your deployment, we recommend that you use policies. Azure Firewall Manager also supports Standard and Premium policies. If your deployment needs any of the components below, you should choose Standard policy:

NAT rules, Network rules, Application rules

Custom DNS, DNS proxy

IP Groups

Web Categories

Threat Intelligence

More advanced deployments may require capabilities that will only be available in the Premium policies, which are: TLS Inspection, Web Categories, URL Filtering, and IDPS.

Another scenario supported by Azure Firewall Manager is to leverage third-party security as a service (SECaaS) offerings to protect Internet access for your users. By using this integration, you can secure a hub with a supported security partner. Also, you can route and filter Internet traffic from your Virtual Networks (VNets) or branch locations within a region. The supported security partners are Zscaler, Check Point, and iboss.

The general deployment steps vary according to the deployment type you select. If you decide to deploy Azure Firewall Manager for hub virtual networks, the overall steps are shown below:

Create a Firewall policy.

Create a hub-and-spoke architecture.

Select the security provider; in this case, only Azure Firewall is supported.

Configure the appropriate routes.

Create and configure Azure Front Door

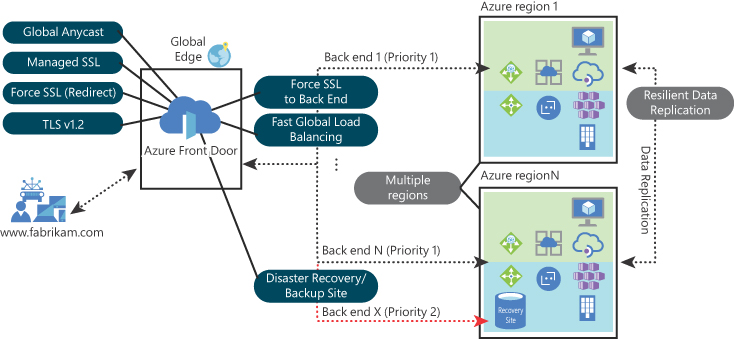

Consider an Azure deployment across different regions that needs to provide a high-performance experience for applications and be resilient to failures. For this type of scenario, Azure Front Door is the best solution.

Azure Front Door works at layer 7 (HTTP/HTTPS) and uses the anycast protocol with split TCP, plus Microsoft’s global network for improving global connectivity. By using split TCP-based anycast protocol, Front Door ensures that your users promptly connect to the nearest Front Door POP (point of presence).

You can configure Front Door to route your client requests to the fastest and most available application back end, which is any Internet-facing service hosted inside or outside of Azure. Some other capabilities included in Front Door are listed here:

Intelligent health probe Front Door monitors your back ends for availability and latency. Based on the results, it fails over instantly when a back end goes down.

URL-based routing Allows you to route traffic to the back end based on the URL path of the request. For example, traffic to www.fabrikam.com/hr/* is routed to a specific pool, whereas www.fabrikam.com/soc/* goes to another.

Multiple-site hosting Enables you to configure a more efficient topology for your deployments by adding different websites to a single Front Door and redirecting to different pools.

Session affinity Uses cookie-based session affinity to keep the session in the same back end.

TLS termination Support for TLS termination at the edge.

Custom domain, SSL offloading, and certificate management You can let Front Door manage your certificate, or you can upload your own TLS/SSL certificate.

Application layer security Allows you to author your own custom web application firewall (WAF) rules for access control, and it comes with Azure DDoS Basic enabled. Front Door is also a layer 7 reverse proxy, which means it only allows web traffic to pass through to back ends and blocks other types of traffic by default.

URL redirection Allows you to configure different types of redirection, which includes HTTP to HTTPS redirection, redirection to different hostnames, redirection to different paths, or redirections to a new query string in the URL.

URL rewrite Allows you to configure a custom forwarding path to construct a request to forward traffic to the back end.

The diagram shown in Figure 2-31 reflects some of the features that were mentioned previously and gives you a better topology view of the main use case for Azure Front Door.

FIGURE 2-31 A use case for Azure Front Door

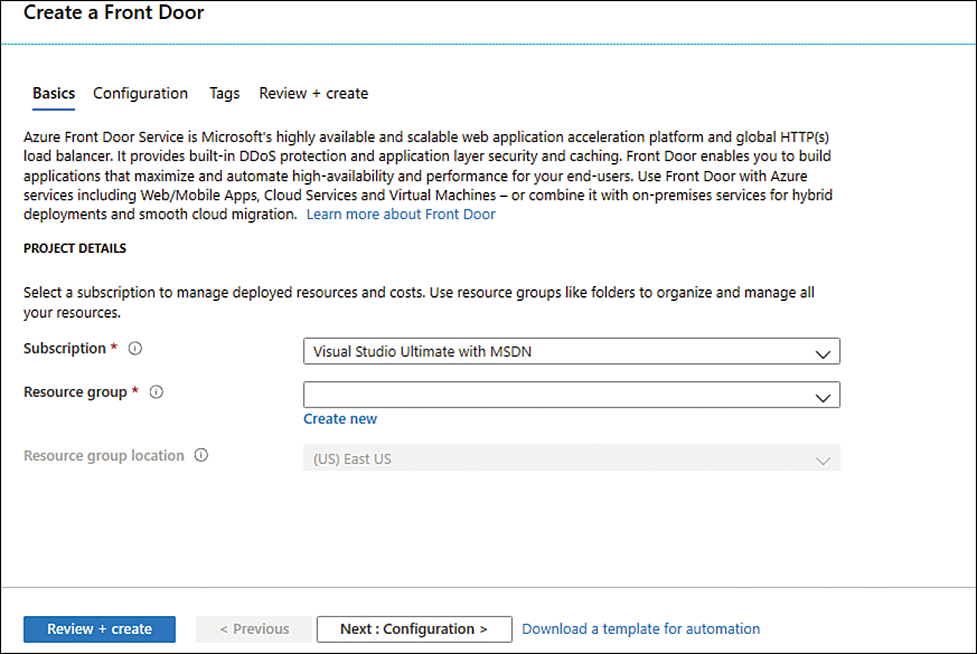

Follow the steps below to configure your Azure Front Door:

Navigate to the Azure portal at https://portal.azure.com.

In the search bar, type front and under Services, click Front Doors.

On the Front Doors page, click the Add button; the Create A Front Door page appears, as shown in Figure 2-32.

FIGURE 2-32 Azure Front Door creation page

In the Subscription drop-down menu, select the subscription that you want to use to create the Front Door.

In the Resource Group drop-down menu, select the resource group that you want for this Front Door.

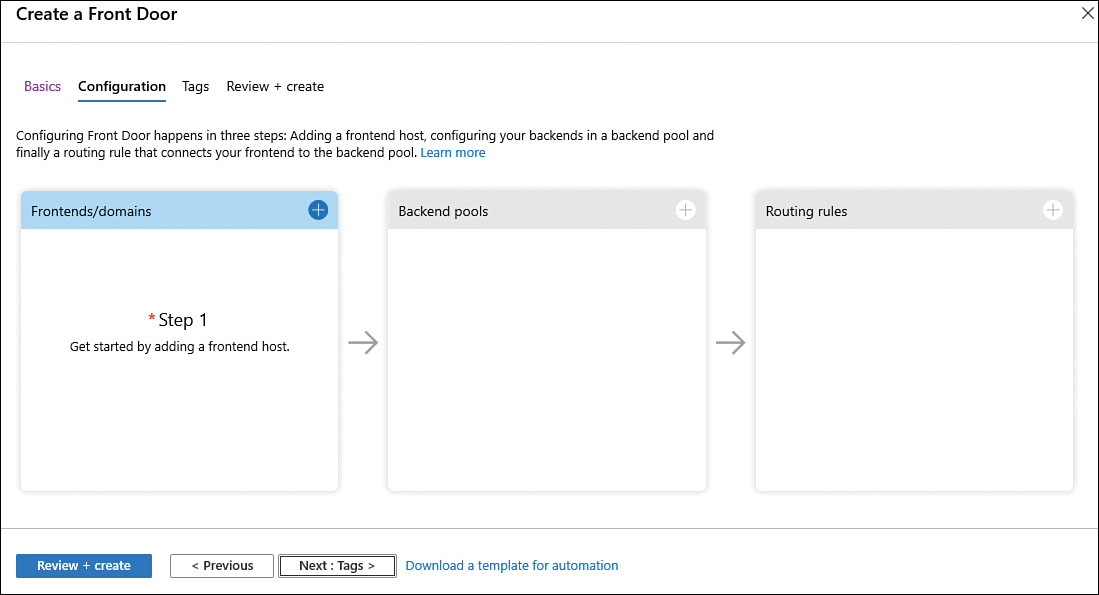

Click the Next: Configuration button; the Configuration tab appears, as shown in Figure 2-33.

FIGURE 2-33 Initial Front Door configuration

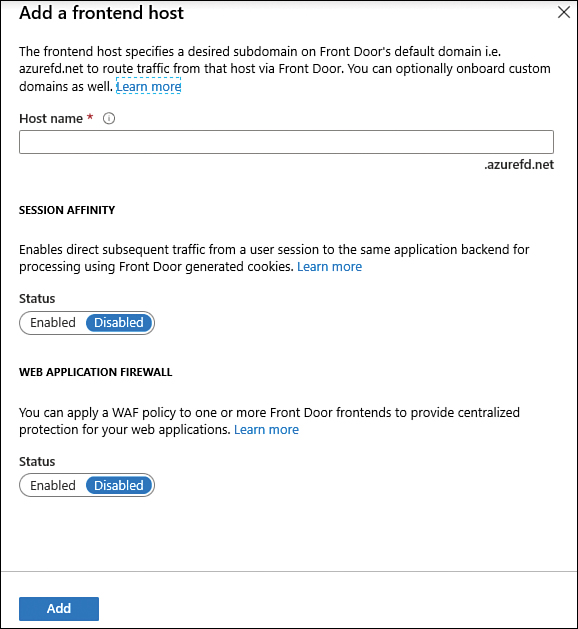

Click the plus sign (+) in the first square, Frontends/Domains; the Add Front End Host blade appears, as shown in Figure 2-34.

FIGURE 2-34 Add A Frontend Host

In the Host Name field, type a unique name for this front end.

Front Door forwards requests originating from the same client to different back ends based on the load-balancing configuration, which means that Front Door doesn’t use session affinity by default. However, stateful applications usually prefer that subsequent requests from the same user land on the same back end that processed the initial request. In this case, you need to enable session affinity. For this example, leave the default selection in Session Affinity (Disabled).

If you want to use Web Application Firewall (WAF) to protect your web application, you can take advantage of the centralized management provided by Front Door. For this example, leave the default Disabled setting for Web Application Firewall and click the Add button.

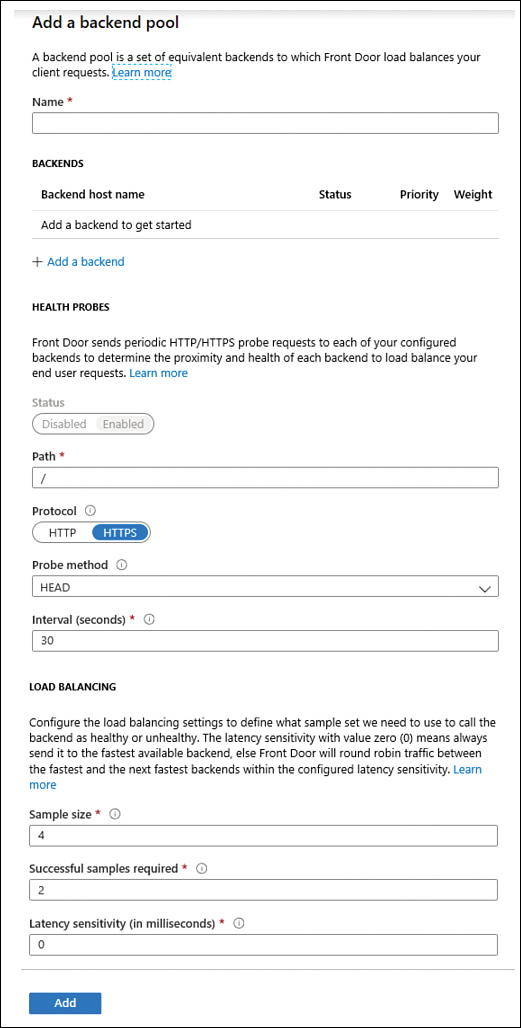

Click the plus sign (+) in the second square, Back End Pools; the Add Back End Pool blade appears, as shown in Figure 2-35.

In the Name field, type a unique name for the back-end pool.

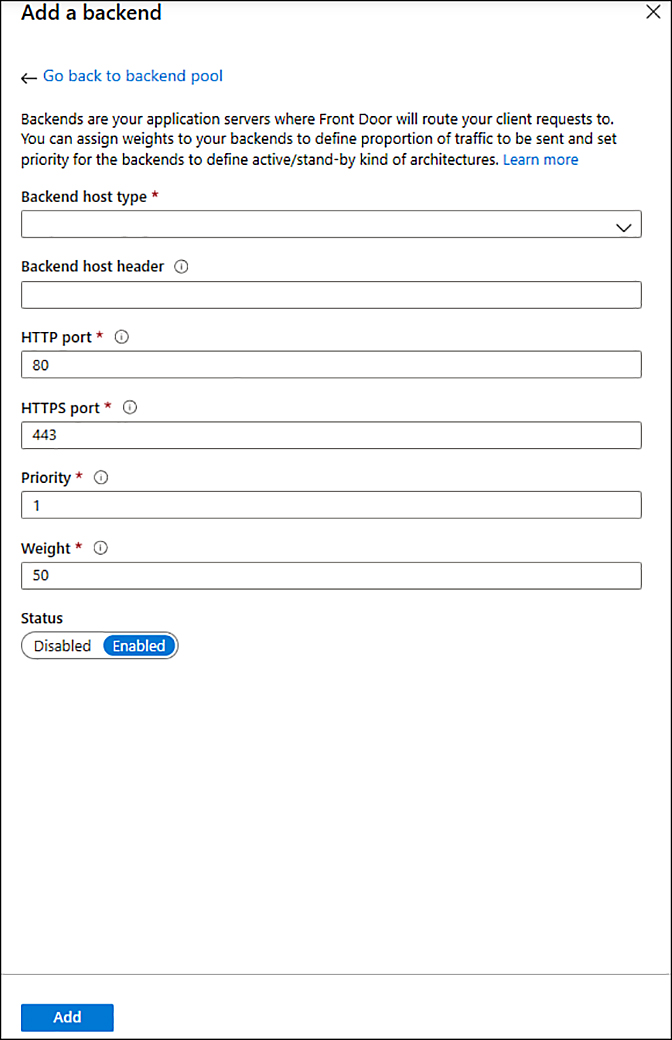

In the Back Ends section, click Add A Back End; the Add A Back End blade appears, as shown in Figure 2-36.

FIGURE 2-35 Add A Back End Pool

In the Back End Host Type drop-down menu, you can choose the type of resource you want to add. Select App Service in the drop-down menu.

Once you make this selection, the remaining parameters should be automatically filled with the default options. Review the values and click the Add button.

Now that you are back to the Add Back End Pool blade, review the options under the Health Probes section and notice that the default setting for Probe Method is HEAD. The HEAD method is identical to GET; the difference is that the server must not return a message body in the response. This is also the recommended setting to lower the load on your back ends (as well as the cost).

FIGURE 2-36 Configuring a new backend

The Load Balancing settings for the back-end pool define how health probes are evaluated. These settings are used to determine whether the back end is healthy or unhealthy. The Sample Size determines how many sample health probes are considered when evaluating the state of the back end (health evaluation). The Successful Samples Required is the threshold for how many of those samples must succeed for the back end to be considered healthy. The Latency Sensitivity (in milliseconds) option is used when you want to send requests to back ends within the established latency measurement sensitivity range.

Leave the default selections and click the Add button.
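To make the Load Balancing settings more concrete, here is a minimal sketch of how such an evaluation could work. It is an illustration under stated assumptions, not Front Door's actual implementation: the function names, the sample values, and the interpretation of latency sensitivity as "within N milliseconds of the fastest back end" are assumptions for this example.

```python
from typing import Dict, List


def is_healthy(probe_results: List[bool], sample_size: int,
               successful_samples_required: int) -> bool:
    """Evaluate the most recent `sample_size` probes against the threshold."""
    recent = probe_results[-sample_size:]
    # True counts as 1, so sum() gives the number of successful probes.
    return sum(recent) >= successful_samples_required


def within_latency_range(latencies_ms: Dict[str, float],
                         sensitivity_ms: float) -> List[str]:
    """Keep back ends whose latency is within `sensitivity_ms` of the fastest.

    Assumption: the sensitivity range is measured from the lowest latency.
    """
    fastest = min(latencies_ms.values())
    return sorted(name for name, ms in latencies_ms.items()
                  if ms <= fastest + sensitivity_ms)


# Illustrative values: look at the last 4 probes and require 2 successes.
print(is_healthy([True, False, False, True, True], 4, 2))
print(is_healthy([True, True, False, False, False, True], 4, 2))

# Hypothetical back-end names and latencies.
print(within_latency_range({"eu": 40.0, "us": 55.0, "asia": 120.0}, 20.0))
```

A larger sample size smooths out transient probe failures at the cost of slower failure detection, which is the trade-off to keep in mind when moving away from the defaults.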

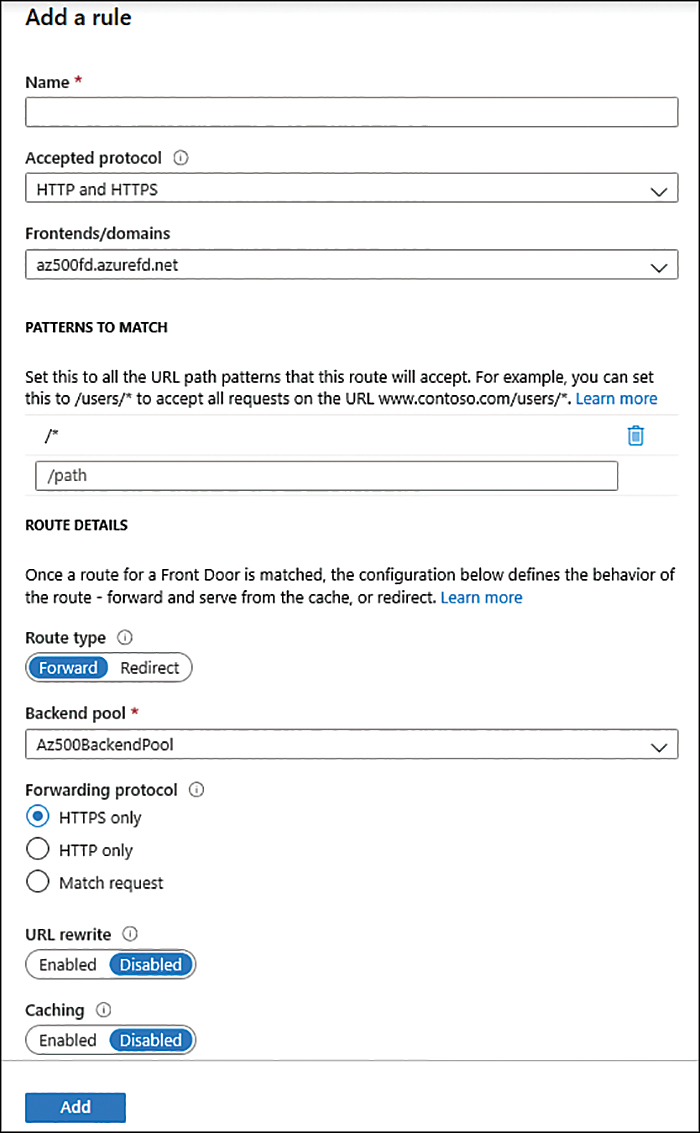

Click the plus sign (+) in the third square, Routing Rules; the Add Rule blade appears, as shown in Figure 2-37.

FIGURE 2-37 Adding a new rule

In the Name field, type a unique name for this routing rule.

Under the Patterns To Match section, you can add a specific pattern that you want to use. When Front Door evaluates a request, it looks for any routing rule with an exact match on the host. If no front-end host matches exactly, it rejects the request and sends a 400 Bad Request error. After determining the specific front-end host, Front Door filters the routing rules based on the requested path. For this example, leave the default selections.
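The two-stage matching described above (front-end host first, then path) can be sketched as follows. This is a simplified model rather than Front Door's real matching engine: the `RoutingRule` type, the pool names, and the www.contoso.com host are hypothetical, and two behaviors are assumptions of this sketch: picking the longest matching path pattern as the most specific one, and rejecting the request when no path pattern matches.

```python
from fnmatch import fnmatchcase
from typing import List, NamedTuple, Optional


class RoutingRule(NamedTuple):
    frontend_host: str
    path_pattern: str
    backend_pool: str


def select_pool(rules: List[RoutingRule], host: str, path: str) -> Optional[str]:
    """Return the back-end pool for a request, or None for 400 Bad Request."""
    # Stage 1: the front-end host must match exactly.
    host_rules = [r for r in rules if r.frontend_host == host.lower()]
    if not host_rules:
        return None  # no exact host match: reject with 400 Bad Request

    # Stage 2: filter the remaining rules on the requested path.
    matches = [r for r in host_rules if fnmatchcase(path, r.path_pattern)]
    if not matches:
        return None  # assumption: no path match is also rejected

    # Assumption: the longest pattern is treated as the most specific.
    return max(matches, key=lambda r: len(r.path_pattern)).backend_pool


rules = [
    RoutingRule("www.fabrikam.com", "/hr/*", "hr-pool"),
    RoutingRule("www.fabrikam.com", "/soc/*", "soc-pool"),
    RoutingRule("www.fabrikam.com", "/*", "default-pool"),
]

print(select_pool(rules, "www.fabrikam.com", "/hr/payroll"))
print(select_pool(rules, "www.fabrikam.com", "/index.html"))
print(select_pool(rules, "www.contoso.com", "/hr/payroll"))
```

The catch-all `/*` rule plays the role of the default selection in the portal: any path on a known host that is not claimed by a more specific pattern still reaches a pool.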

Under the Route Details section, you can configure the behavior of the route. In the Route Type option, you can select whether you want to forward to the back-end pool or redirect to another place. For this example, leave this set to Forward, which is the default. Enable the URL Rewrite option if you want to create a custom forwarding path. The Caching option is disabled by default, which means that requests that match this routing rule will not attempt to use cached content. In other words, requests will always fetch from the back end. Leave all the default selections in this section and click the Add button.

Click the Review + Create button, review the summary of your configuration, and click the Create button to finish.

Wait until the deployment is finished. Once it is finished, click the Go To Resource button to see the Front Door dashboard.

It will take a few minutes for the configuration to be deployed globally after you finish creating your Front Door.

Web application firewall

Web Application Firewall (WAF) can be used on Front Door. Azure also allows you to deploy WAF in other ways, so it is important to understand the design requirements before deciding which WAF deployment you should use.

Review the flowchart available at http://aka.ms/wafdecisionflow to better understand the available options, which include Azure Load Balancer, Application Gateway, and Azure Front Door. If your scenario has the following characteristics, WAF with Front Door is a good choice:

Your app uses HTTP/HTTPS.

Your app is Internet-facing.

Your app is globally distributed across different regions.

Your app is hosted in PaaS (such as an Azure App Service).

Consider deploying WAF on Front Door when you need a global and centralized solution. When using WAF with Front Door, every incoming request delivered by Front Door to your web applications is inspected at the network edge.

Create and configure Web Application Firewall (WAF)

In a scenario where you need to protect your web applications from common threats, such as SQL injection, cross-site scripting, and other web-based exploits, using Azure Web Application Firewall (WAF) on Azure Application Gateway is the most appropriate way to address these needs. WAF on Application Gateway is based on Open Web Application Security Project (OWASP) core rule set 3.1, 3.0, or 2.2.9. These rules will be used to protect your web apps against the top 10 OWASP vulnerabilities, which you can find at https://owasp.org/www-project-top-ten.

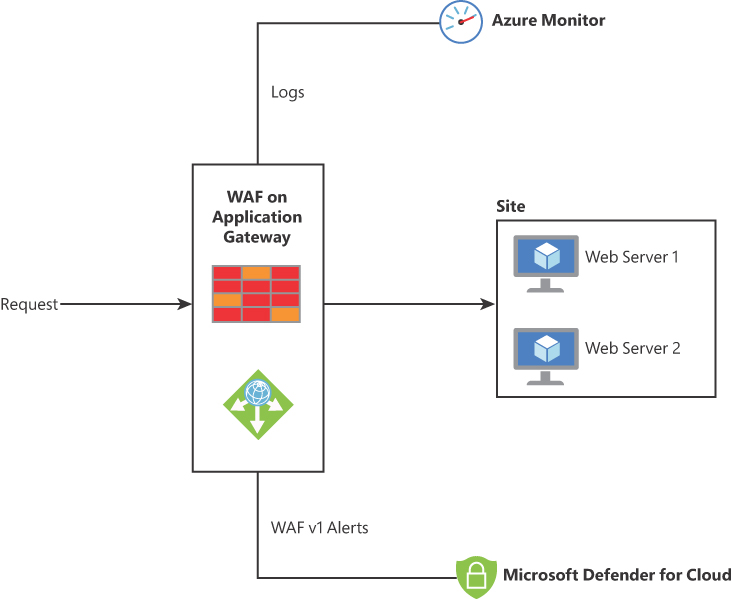

You can use WAF on Application Gateway to protect multiple web applications. A single instance of Application Gateway can host up to 40 websites, and those websites will be protected by a WAF. Even though you have multiple websites behind the WAF, you can still create custom policies to address the needs of those sites. The diagram shown in Figure 2-38 has more details about the different components of this solution.

FIGURE 2-38 Different integration components for WAF on Application Gateway

In the example shown in Figure 2-38, a WAF Policy has been configured for the back-end site. This policy is where you define all rules, custom rules, exclusions, and other customizations, such as a file upload limit.

WAF on Application Gateway supports Transport Layer Security (TLS) termination, cookie-based session affinity, round-robin load distribution, and content-based routing. The diagram shown in Figure 2-38 also highlights the integration with Azure Monitor, which will receive all logs related to potential attacks against your web applications. WAF v1 alerts will also be streamed to Microsoft Defender for Cloud, and they will appear in the Security Alert dashboard.

Depending on the scenario requirement, you can configure WAF on the Application Gateway to operate in two different modes:

Detection mode This mode will not interfere with traffic when suspicious activity occurs. Rather than blocking suspicious activity, this mode only detects and logs all threat alerts. For this mode to work properly, diagnostic logging and the WAF log must be enabled.

Prevention mode As the name implies, this mode blocks traffic that matches the rules. Blocked requests generate a 403 Unauthorized Access message. At that point, the connection is closed, and a record is created in the WAF logs.

When reviewing the WAF log for a request that was blocked, you will see a message that contains some fields that are similar to this example:

Mandatory rule. Cannot be disabled. Inbound Anomaly Score Exceeded (Total Inbound Score: 5 - SQLI=0,XSS=0,RFI=0,LFI=0,RCE=0,PHPI=0,HTTP=0,SESS=0): Missing User Agent Header; individual paranoia level scores: 3, 2, 0, 0

The anomaly score comes from the OWASP 3.x rules, each of which has a specific severity: Critical, Error, Warning, or Notice. The previous message indicates that the total inbound score is 5, which translates to a severity equal to Critical. It is important to emphasize that the traffic will not be blocked until the score reaches the threshold, which is 5. This means that if traffic matches a block rule but has an anomaly score of 3, it will not be blocked, though the message that you will see in the WAF log says that it is blocked. The severity levels are 5 (Critical), 4 (Error), 3 (Warning), and 2 (Notice).
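The scoring logic above can be expressed as a short sketch. The severity values and the threshold of 5 come from the text; the function names, the idea of passing matched rule severities as a list, and the `mode` parameter are assumptions made for illustration and do not mirror a real WAF API.

```python
from typing import List

# Severity values and threshold as listed in the text.
SEVERITY_SCORES = {"Critical": 5, "Error": 4, "Warning": 3, "Notice": 2}
BLOCK_THRESHOLD = 5


def anomaly_score(matched_severities: List[str]) -> int:
    """Add up the severity score of every rule the request matched."""
    return sum(SEVERITY_SCORES[severity] for severity in matched_severities)


def is_blocked(matched_severities: List[str], mode: str = "Prevention") -> bool:
    """Block only in Prevention mode and only once the threshold is reached."""
    if mode != "Prevention":
        return False  # Detection mode logs the match but never blocks
    return anomaly_score(matched_severities) >= BLOCK_THRESHOLD


print(is_blocked(["Warning"]))                     # score 3, below threshold
print(is_blocked(["Warning", "Notice"]))           # score 5, reaches threshold
print(is_blocked(["Critical"], mode="Detection"))  # logged only
```

A single Warning match stays under the threshold, which is why a log entry can mention a block rule even though the request itself was allowed through.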

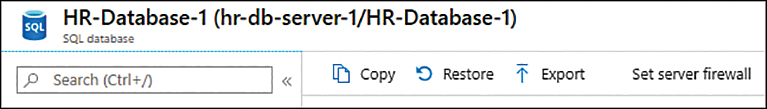

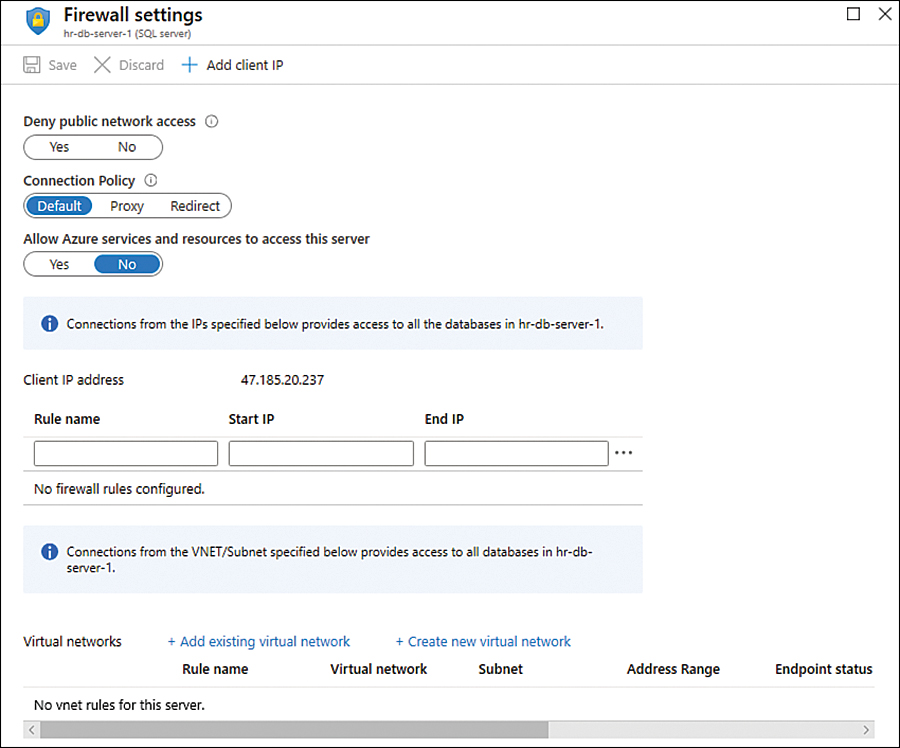

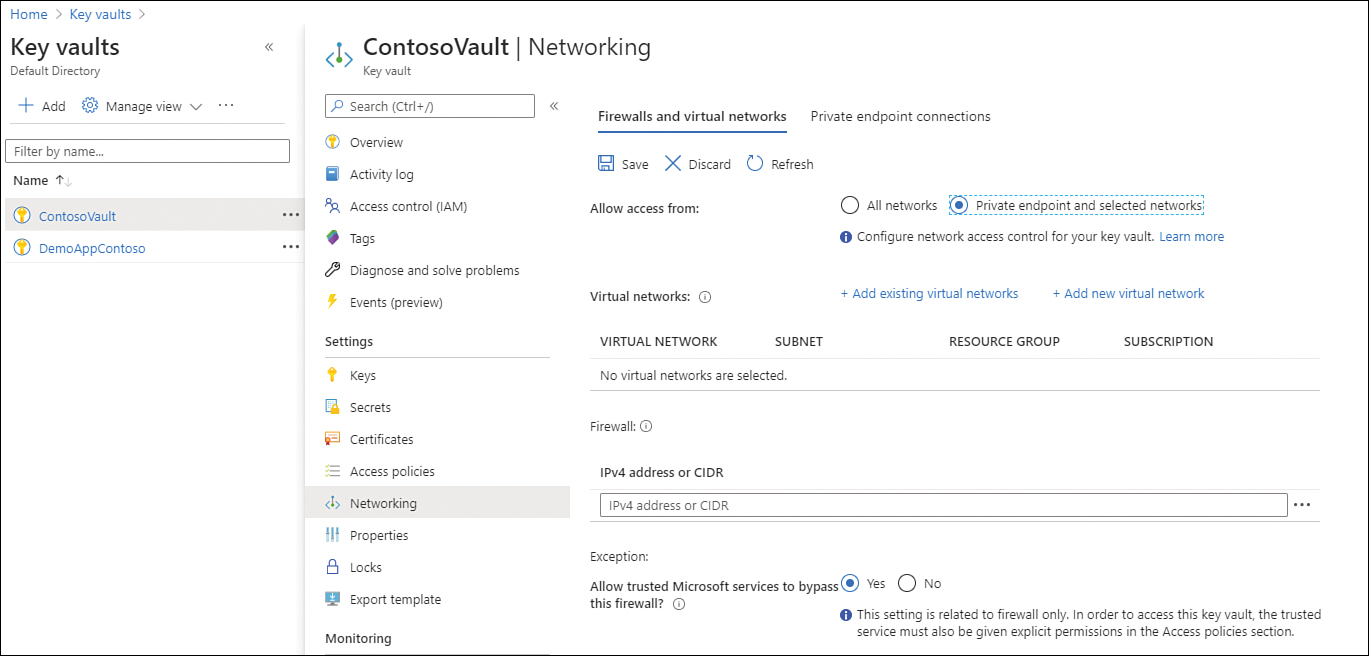

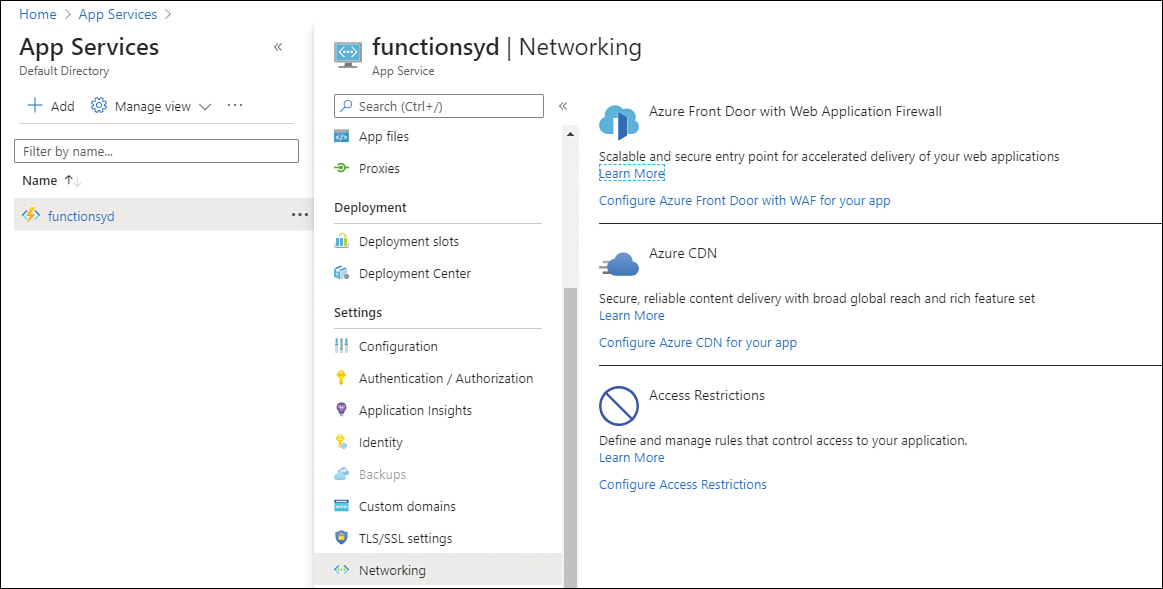

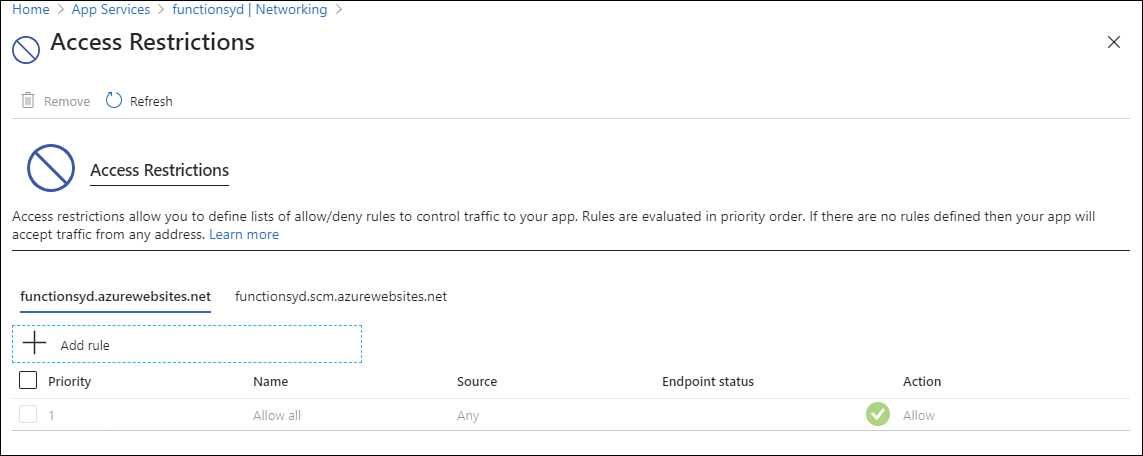

Configure resource firewall

In addition to Azure Firewall, you can also leverage the native firewall-related capabilities for different services. Azure Storage and SQL Database are examples of Azure services that have this functionality.

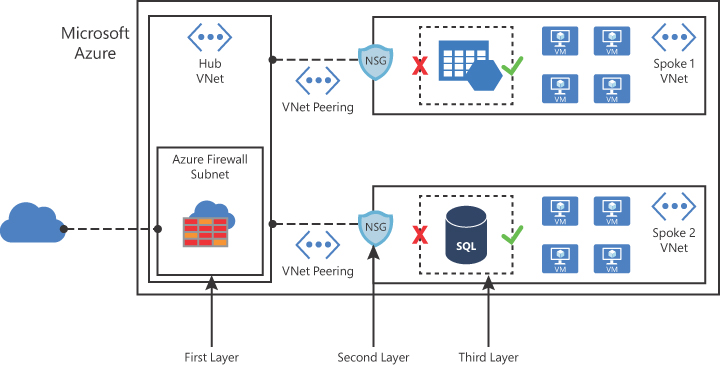

When you leverage this built-in functionality to harden your resources, you are adding an extra layer of security to your workload and following the defense-in-depth strategy, as shown in Figure 2-39.

FIGURE 2-39 Multiple layers of protection to access the resource

Azure storage firewall

When you enable this feature in Azure Storage, you can better control the level of access to your storage accounts based on the type and subset of networks used. When network rules are configured, only applications requesting data over the specified set of networks can access a storage account.
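Conceptually, a network rule is an allow list that is checked against the source of each request. The sketch below illustrates that idea only; it is not how Azure Storage is implemented or configured. The function name is hypothetical, and the address ranges are reserved documentation ranges chosen for the example.

```python
from ipaddress import ip_address, ip_network
from typing import Iterable


def is_request_allowed(source_ip: str, allowed_networks: Iterable[str]) -> bool:
    """Allow a request only if its source falls inside an allowed network."""
    addr = ip_address(source_ip)
    return any(addr in ip_network(cidr) for cidr in allowed_networks)


# Hypothetical allow list: one address range and one single address.
allowed = ["203.0.113.0/24", "198.51.100.7/32"]

print(is_request_allowed("203.0.113.25", allowed))
print(is_request_allowed("198.51.100.7", allowed))
print(is_request_allowed("192.0.2.10", allowed))
```

With an empty allow list, every request in this sketch is denied, which is the deny-by-default posture that the extra firewall layer is meant to provide.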